Counterfactuals: Making AI Decisions Understandable

What is a counterfactual? Now one of the core concepts in the field of explainable artificial intelligence (XAI), the idea originally comes from philosophy. The foundation of counterfactual thinking goes back to the philosophical question of cause and effect. David Hume gave one of the first counterfactual definitions of causation, which David Lewis later developed into a theory of counterfactual conditionals. The essence was that a cause had to be something 'that made a difference' to the outcome.

In XAI today, the term refers to a hypothetical situation or outcome that did not occur but could have occurred under different circumstances. In a machine learning context, a counterfactual explanation (CFE) is useful because it raises a specific question: 'What minimal change to the input would result in a different prediction?'

In this blog post we look at why this question is so useful, what the benefits are of asking it in the context of AI and ML, and how it is used in newer fields such as LLMs.

Key facts

Counterfactuals show how decisions can be changed.

LLM counterfactuals are still very much under development.

Rashomon effect: multiple correct answers are possible.

Using Counterfactuals in smart systems

Why use counterfactuals at all? Transparency, trust and recourse

AI models are becoming increasingly complex, and various approaches are being explored to gain more insight into black-box models. Being able to show why a model makes a decision is important and ethically sound, but that is not the only reason. Under the EU GDPR (Recital 71 and Article 22), although the wording remains somewhat ambiguous, a person subject to an automated decision should also have the right to obtain an explanation of it.

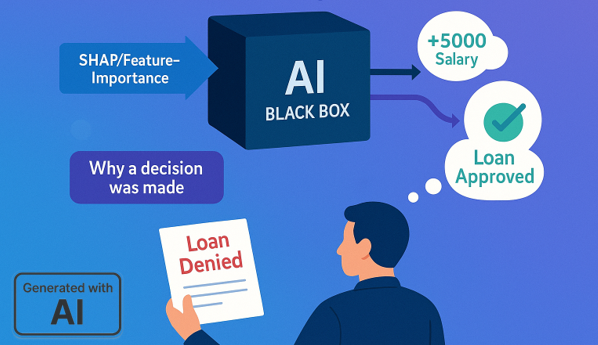

One advantage of CFEs over other explainability techniques is how understandable and human they are. Instead of showing which features contributed most to a decision, they give the user or developer a 'prescribed' path towards a desired output. A classic example comes from loan applications.

If a loan is 'Denied', what would the applicant need to have or do to get the loan approved? An explanation other than a CFE might note that the rejection was due to a low income and many outstanding loans. That says something about where the problem lies, but it does not make tangible how high the salary needs to be or how much outstanding debt is acceptable. A counterfactual, by contrast, gives a tangible explanation such as: 'If your salary had been higher than 5000 and your outstanding debt lower than 200, the loan would have been approved.'

That is why counterfactuals are well suited to explaining models.
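To make the loan example concrete, here is a minimal sketch in Python. The rule inside `approve_loan` is a hypothetical stand-in for a trained model that simply mirrors the thresholds from the example above, and the applicant values are made up. The sketch checks that a candidate counterfactual really flips the decision and then phrases it as an 'if ... then ...' statement.

```python
from typing import Dict

Applicant = Dict[str, float]


def approve_loan(applicant: Applicant) -> bool:
    """Toy stand-in for a trained model (a hypothetical rule, for illustration only)."""
    return applicant["salary"] > 5000 and applicant["debt"] < 200


def describe_counterfactual(original: Applicant, counterfactual: Applicant) -> str:
    """Turn the changed features into an 'if ... then ...' statement."""
    changes = [
        f"{name} had been {counterfactual[name]:g} instead of {value:g}"
        for name, value in original.items()
        if counterfactual[name] != value
    ]
    return "If your " + " and your ".join(changes) + ", the loan would have been approved."


applicant = {"salary": 4200, "debt": 650}   # made-up applicant whose loan is denied
candidate = {"salary": 5100, "debt": 150}   # made-up candidate counterfactual

# A counterfactual is only valid if the prediction really flips.
is_valid = (not approve_loan(applicant)) and approve_loan(candidate)
explanation = describe_counterfactual(applicant, candidate)
print(is_valid, explanation)
```

The explanation names concrete target values, which is exactly what a feature-importance ranking does not provide.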

The Qualities and Challenges of Effective Counterfactuals

A counterfactual should always add value and never cause confusion. Three aspects are important here:

- Proximity: A CFE should be as similar as possible to the original input, meaning that as few features as possible are changed. This helps keep the explanation of the model transparent and understandable.

- Feasibility: The change must also be realistic. Do not build a "what if" scenario around a 120-year-old, for example.

- Diversity: Several diverse alternatives can map out more complex models more clearly.
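The three aspects can be turned into simple checks. The sketch below is one possible way to do that, not a standard definition: the feature ranges, the set of immutable features and the range-normalised L1 distance are all assumptions made for illustration. It also assumes the candidates have already been confirmed to flip the model's prediction.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Instance = Dict[str, float]

# Hypothetical feature metadata: plausible value ranges and features that cannot change.
RANGES: Dict[str, Tuple[float, float]] = {
    "age": (18, 100),
    "salary": (0, 20000),
    "debt": (0, 50000),
}
IMMUTABLE = {"age"}


def distance(a: Instance, b: Instance) -> float:
    """Proximity: range-normalised L1 distance, smaller means more similar."""
    return sum(abs(a[f] - b[f]) / (hi - lo) for f, (lo, hi) in RANGES.items())


def changed_features(original: Instance, cf: Instance) -> List[str]:
    """Proximity: the fewer features change, the easier the explanation is to read."""
    return [f for f in original if original[f] != cf[f]]


def is_feasible(original: Instance, cf: Instance) -> bool:
    """Feasibility: values stay in a plausible range and immutable features are untouched."""
    in_range = all(lo <= cf[f] <= hi for f, (lo, hi) in RANGES.items())
    untouched = all(original[f] == cf[f] for f in IMMUTABLE)
    return in_range and untouched


def diversity(cfs: List[Instance]) -> float:
    """Diversity: mean pairwise distance between the offered alternatives."""
    pairs = list(combinations(cfs, 2))
    return sum(distance(a, b) for a, b in pairs) / len(pairs) if pairs else 0.0


original = {"age": 35, "salary": 4200, "debt": 650}
candidates = [
    {"age": 35, "salary": 5100, "debt": 650},    # one larger change
    {"age": 35, "salary": 4600, "debt": 150},    # two smaller changes
    {"age": 120, "salary": 4200, "debt": 650},   # changes age and leaves the plausible range
]

feasible = [cf for cf in candidates if is_feasible(original, cf)]
for cf in feasible:
    print(changed_features(original, cf), round(distance(original, cf), 4))
print("diversity:", round(diversity(feasible), 4))
```

How these scores are weighed against each other is a design choice; libraries typically combine them into a single objective or let the user set constraints.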

Even if you follow these rules, counterfactuals can still cause some confusion. Here I refer back to a bit of ethics and psychology, namely the 'causality paradox'. An AI system reveals associations and correlations in data, not directly cause and effect. Suppose an AI system explains a rejected loan with: 'Your salary should be higher.' A wrong conclusion would be that a high salary will always get you a loan. The model selects a solution based on a statistical correlation, whereas a human sees cause and effect. Counterfactuals must therefore always be presented responsibly, and misinformation avoided through disclaimers or similar measures.

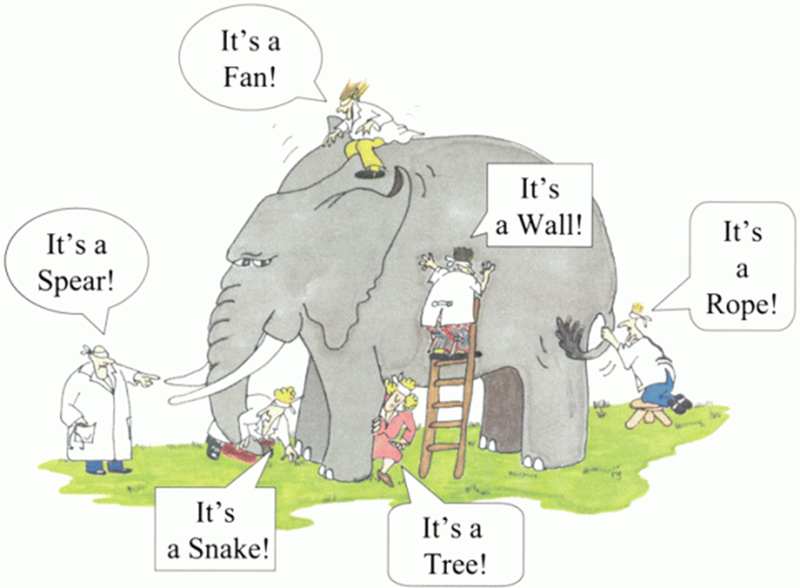

Another challenge that recurs in the literature is the "Rashomon effect": several different CFEs can all be correct at the same time. This is not a flaw. Changing feature A may well lead to the same result as changing feature B, or the other way around. Techniques such as DiCE can be used to handle this. The goal is not to find the one counterfactual but a list of the most suitable ones.
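The Rashomon effect is easy to reproduce on a toy example. In the sketch below, the scoring rule in `approve` is a hypothetical model and the step size is arbitrary. Two different single-feature changes both flip the same rejection, so both are correct counterfactuals.

```python
from typing import Dict, List


def approve(salary: int, debt: int) -> bool:
    """Hypothetical toy model: approve when salary outweighs twice the debt by 4000."""
    return salary - 2 * debt >= 4000


def single_feature_alternatives(applicant: Dict[str, int], step: int = 50) -> List[Dict[str, int]]:
    """Collect the smallest salary-only and debt-only changes that flip the decision."""
    results = []
    # Alternative A: raise only the salary.
    for salary in range(applicant["salary"], 10001, step):
        if approve(salary, applicant["debt"]):
            results.append({**applicant, "salary": salary})
            break
    # Alternative B: reduce only the debt.
    for debt in range(applicant["debt"], -1, -step):
        if approve(applicant["salary"], debt):
            results.append({**applicant, "debt": debt})
            break
    return results


applicant = {"salary": 4200, "debt": 650}
alternatives = single_feature_alternatives(applicant)
for alternative in alternatives:
    print(alternative)
```

Neither alternative is more "true" than the other; which one is more useful depends on what the applicant can realistically change.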

Counterfactuals Across the AI Spectrum: A Model-Specific Approach

2.1 Prediction Models and Tabular Data

For classic prediction models, counterfactuals are very intuitive. Such models are used for insurance, diagnosis and energy forecasting, among other things. Finding a counterfactual for tabular data usually means searching for the smallest changes to an input that would change the model's output. These changes are then presented as a suggestion.
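The search described above can be sketched as a brute-force loop over candidate values. This is a minimal illustration, not how production libraries work: the insurance rule in `premium_class`, the candidate grids and the scaling factors are all assumptions, and an exhaustive search only scales to a handful of features.

```python
from itertools import product
from typing import Callable, Dict, Optional, Sequence

Instance = Dict[str, float]


def find_counterfactual(
    predict: Callable[[Instance], str],
    instance: Instance,
    candidate_values: Dict[str, Sequence[float]],
    scales: Dict[str, float],
) -> Optional[Instance]:
    """Return the candidate with a different prediction and the smallest scaled L1 change."""
    original_label = predict(instance)
    names = list(candidate_values)
    best, best_cost = None, float("inf")
    for combo in product(*(candidate_values[n] for n in names)):
        candidate = {**instance, **dict(zip(names, combo))}
        if predict(candidate) == original_label:
            continue  # the prediction did not change, so this is not a counterfactual
        cost = sum(abs(candidate[n] - instance[n]) / scales[n] for n in names)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best


def premium_class(x: Instance) -> str:
    """Hypothetical insurance model: many claims or many kilometres give a high premium."""
    return "high" if 10000 * x["claims"] + x["annual_km"] > 40000 else "standard"


driver = {"claims": 2, "annual_km": 25000}
best = find_counterfactual(
    premium_class,
    driver,
    candidate_values={"claims": [0, 1, 2], "annual_km": range(5000, 25001, 5000)},
    scales={"claims": 5, "annual_km": 50000},
)
print(best)
```

The scaling factors decide what "smallest" means: with different scales, removing one claim could become cheaper than driving 5000 km less.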

2.2 Neural Networks and Image Classifiers

For neural networks, generating a CFE depends on the type of model. It also often requires quite complex mathematics because of the greater complexity of the model. There is active research into frameworks and methods for obtaining counterfactuals for these types of models.

In the subfield of image classification there is an additional challenge. Changing a single pixel value may be enough to make a classifier fail, but that is not a realistic change for a human or for the environment. A new generation of techniques uses GANs and diffusion models to generate counterfactuals, so that a classifier can be tested with realistic images. For example, for a chest X-ray classified as "pneumonia", a system could generate an image showing what the X-ray would have looked like had the patient not had the disease. This could add more value than a simple graph highlighting the difference.

2.3 Counterfactuals and Large Language Models (LLMs)

Applying counterfactuals to large language models (LLMs) is a rapidly evolving area within XAI. The goal is to understand how a small change in an input prompt or context would have led to a different output. This differs from traditional counterfactual generation, which depends on a predefined model and a search process.

Instead of a predefined model and search process, work on LLMs often relies on self-generated counterfactual explanations (SCEs). Here the model itself is asked to formulate possible alternatives. This usually happens through an iterative process: a first prompt is given to the model, which returns an output. A new prompt is then constructed that asks the model to come up with a modified input (the counterfactual) that would result in a different output. This can be done via "open prompting" or via "rationale-based prompting", in which the model first names the most important factors in the original input and then proposes a possible change.

Research shows, however, that this approach still has limitations. LLMs are very good at generating explanations, but these often turn out to be not entirely reliable or faithful to the actual process behind the decision. The quality of such explanations varies strongly by task and model architecture. The risk is that the explanation reflects the model's accumulated parametric knowledge rather than the actual reasoning that led to the output. To the user the explanation may therefore seem plausible yet still be misleading. This underlines that further methodological development is needed to make counterfactuals in LLMs not only plausible but also consistent and correct.
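The iterative process can be sketched as follows. Everything here is an assumption made for illustration: the `LLM` interface (prompt in, text out), the prompt wording, and the `stub_llm` function, which is a tiny rule-based stand-in so the example runs without a real model. The last step feeds the proposed counterfactual back to the model to check whether the label really changes.

```python
from typing import Callable, Tuple

LLM = Callable[[str], str]  # assumed interface: prompt text in, completion text out


def classify(llm: LLM, text: str) -> str:
    prompt = (
        "Classify the sentiment of this review as positive or negative.\n"
        f"Review: {text}\nAnswer:"
    )
    return llm(prompt).strip().lower()


def counterfactual_prompt(text: str, label: str, target: str, rationale: str = "") -> str:
    # Rationale-based prompting adds the factors the model named itself.
    factors = f"Key factors: {rationale}\n" if rationale else ""
    return (
        f"The model labelled the text below as {label}.\n{factors}"
        f"Review: {text}\n"
        f"Rewrite it with as few edits as possible so that the label becomes {target}. "
        "Return only the rewritten text."
    )


def self_generated_counterfactual(
    llm: LLM, text: str, target: str, use_rationale: bool = False
) -> Tuple[str, bool]:
    label = classify(llm, text)  # first prompt: the original prediction
    rationale = ""
    if use_rationale:
        rationale = llm(
            f"Which words most influenced the label {label}?\nReview: {text}\nAnswer:"
        ).strip()
    counterfactual = llm(counterfactual_prompt(text, label, target, rationale)).strip()
    is_valid = classify(llm, counterfactual) == target  # does the label really flip?
    return counterfactual, is_valid


def stub_llm(prompt: str) -> str:
    """Rule-based stand-in for a real model so the sketch runs offline."""
    review = prompt.split("Review: ", 1)[1].split("\n", 1)[0]
    if prompt.startswith("Classify"):
        return "negative" if "terrible" in review else "positive"
    if prompt.startswith("Which words"):
        return "terrible"
    return review.replace("terrible", "great")


result = self_generated_counterfactual(stub_llm, "The food was terrible.", "positive")
print(result)
```

With a real model, `stub_llm` would be replaced by a call to whichever client is in use, and the validity check becomes the interesting part, since a proposed counterfactual does not always change the model's own answer.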

A Toolkit for Counterfactual Generation: Commercial and Open-Source Solutions

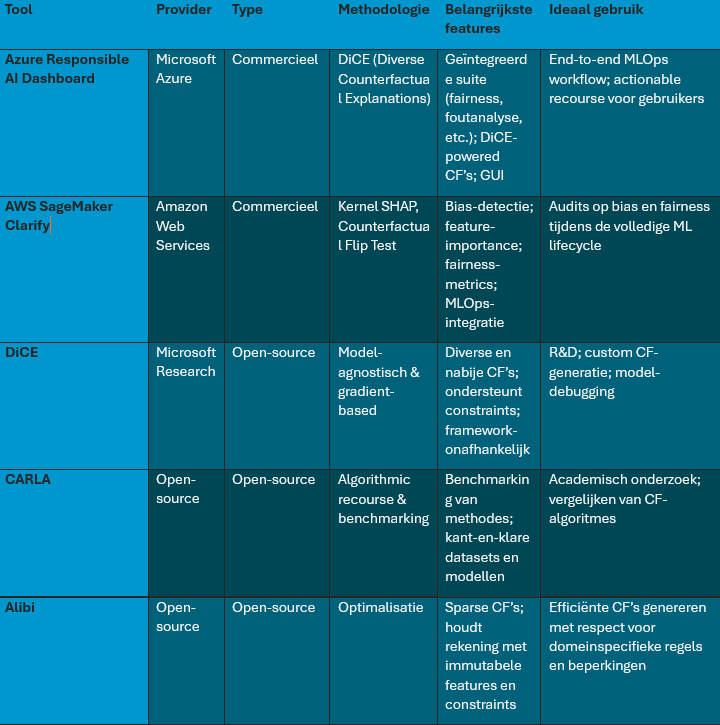

The use of counterfactuals is increasingly supported by a growing ecosystem of commercial products and open-source libraries. These tools help generate, evaluate and apply counterfactual explanations in a wide range of contexts.

3.1 Major Cloud Provider Offerings

Major cloud platforms have integrated explainability features into their services, giving users easy access to counterfactuals.

Microsoft Azure

The Microsoft Azure Responsible AI Dashboard offers an integrated suite of tools for fairness, interpretability and error analysis. An important component is the What-if counterfactuals feature, based on the open-source DiCE library. It lets users specify which features may be changed and within which ranges. This yields valid and logical explanations that are useful both for model debugging and for giving end users concrete recommendations.

Google Cloud

The Google What-If Tool is an interactive, model-agnostic environment in which users can experiment with counterfactuals. A visual interface makes it easy to see how changes in input values affect the prediction.

Amazon Web Services (AWS)

Amazon SageMaker includes SageMaker Clarify, a toolkit focused on bias detection and explainability. A notable feature is the Counterfactual Flip Test, which checks whether predictions "flip" between different protected groups. Although the emphasis is on bias, the underlying techniques for transparency and feature importance, such as Kernel SHAP, are closely related to counterfactual concepts.
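The idea behind a flip test can be illustrated with a simplified sketch. This is not SageMaker Clarify's implementation: it assumes the metric is computed as (F+ − F−) / n over the members of one group, uses a single nearest neighbour with squared Euclidean distance on unscaled features, and runs on made-up data.

```python
from typing import List, Sequence, Tuple

# (features, predicted label), where 1 is the favourable outcome and 0 the unfavourable one
Row = Tuple[Sequence[float], int]


def nearest_prediction(features: Sequence[float], pool: List[Row]) -> int:
    """Prediction of the most similar row in the other group (1-nearest neighbour)."""
    closest = min(pool, key=lambda row: sum((a - b) ** 2 for a, b in zip(features, row[0])))
    return closest[1]


def flip_test(group_d: List[Row], group_a: List[Row]) -> float:
    """Share of group_d members whose look-alike in group_a is treated differently."""
    f_plus = f_minus = 0
    for features, prediction in group_d:
        neighbour = nearest_prediction(features, group_a)
        if prediction == 0 and neighbour == 1:
            f_plus += 1    # unfavourable here, favourable for the look-alike
        elif prediction == 1 and neighbour == 0:
            f_minus += 1   # favourable here, unfavourable for the look-alike
    return (f_plus - f_minus) / len(group_d)


# Made-up (salary, debt) rows with model predictions for two groups.
group_a = [((5000, 200), 1), ((3000, 900), 0)]
group_d = [((5100, 210), 0), ((2900, 950), 0), ((4900, 150), 1), ((3100, 800), 0)]

ft = flip_test(group_d, group_a)
print(ft)
```

A value near zero suggests similar people are treated alike across groups. A clearly positive value means members of `group_d` are more often rejected where their closest counterpart in `group_a` is accepted.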

3.2 Leading Open-Source Libraries

For those who prefer more control or need customisation, there are powerful open-source solutions.

- DiCE (Diverse Counterfactual Explanations)

A leading library developed by Microsoft. DiCE can generate diverse and realistic counterfactuals for a wide range of models. It supports both black-box approaches (random search, genetic search) and gradient-based methods for differentiable models (TensorFlow, PyTorch). Constraints can also be configured so that the results remain feasible.

- CARLA (Counterfactual and Recourse Library)

A Python library aimed at comparing and benchmarking different counterfactual methods. With ready-made datasets and models, CARLA makes it easier to test the performance and assumptions of algorithms.

- Alibi

A broader library that offers multiple XAI methods. Its counterfactual component focuses on sparse and in-distribution explanations, with support for immutable features (e.g. age, gender) that must not be changed.

3.3 The Toolkit for Explanations: Convergence and Complementarity

No single explainability technique gives a complete picture of how a model works. The strongest approach is often a combination of methods. Counterfactuals are ideal for offering actionable recourse, while techniques such as SHAP give insight into the relative contribution of each feature. Together they provide a more holistic view: SHAP tells why a decision was made, counterfactuals show how that decision can be changed. This complementarity, as illustrated in Azure's Responsible AI Dashboard, forms a robust toolkit for debugging models, making them transparent and strengthening user trust.
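The two views can be compared side by side on a linear model, where both have a closed form. The sketch below does not use the `shap` library. It relies on the fact that for a linear model with independent features the Shapley value of feature i reduces to w_i · (x_i − mean_i), and it takes the counterfactual to be the closest point on the decision boundary in Euclidean distance. The weights, baseline and input are hypothetical standardised values.

```python
from typing import List

WEIGHTS = [2.0, -2.0]     # hypothetical weights for standardised salary and debt
BIAS = 0.0
BASELINE = [0.0, 0.0]     # assumed feature means of the standardised data


def score(x: List[float]) -> float:
    return sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS


def approved(x: List[float]) -> bool:
    return score(x) >= 0.0


def attributions(x: List[float]) -> List[float]:
    """Why: contribution of each feature relative to the baseline (linear-model case)."""
    return [w * (v - m) for w, v, m in zip(WEIGHTS, x, BASELINE)]


def closest_counterfactual(x: List[float]) -> List[float]:
    """How to change it: project x onto the decision boundary score(x) = 0."""
    step = -score(x) / sum(w * w for w in WEIGHTS)
    return [v + step * w for v, w in zip(x, WEIGHTS)]


x = [0.5, 1.0]                      # slightly above-average salary, high debt
why = attributions(x)
how = closest_counterfactual(x)
print("approved:", approved(x))
print("attributions:", why)
print("counterfactual:", how, "approved:", approved(how))
```

The attributions say that debt pulls the score down twice as hard as salary pushes it up, while the counterfactual says how far both need to move. The projected point lands exactly on the boundary here; with other numbers a small margin past the boundary would be needed to absorb rounding.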

Counterfactuals as a bridge between human and model

Counterfactual explanations occupy a unique place within the broader field of explainable AI. Where many techniques focus mainly on exposing the importance of features, counterfactuals offer a path to action: they show how an outcome could have been changed. That makes them particularly powerful for making models not only more transparent, but also more usable and more human for end users.

As we have seen, counterfactuals play a role across the full spectrum of AI models: from classic tabular predictions to complex neural networks and the latest generation of LLMs. At the same time, it remains important to take their limitations and challenges into account, such as reliability, feasibility and the correct interpretation of causation versus correlation. The rise of commercial and open-source toolkits clearly shows, however, that practice keeps evolving and that a rich ecosystem is emerging to apply these techniques effectively.

Counterfactuals are therefore no silver bullet, but they are an essential part of the toolbox for anyone working on transparency, fairness and trust in AI. Combining them with other methods yields a more complete picture that benefits developers, researchers and end users alike.

Do you have questions or would you like to discuss this further? Our research group actively works on MLOps, agents and LLMs and is always open to sharing experiences and helping where possible.

Contributors

Authors

Jens Krijgsman, Automation & AI researcher, Teamlead

Nathan Segers, Lecturer XR and MLOps
