Introduction to AI Agents: How They Work, Terminology and Developments

AI Agents: a term that more and more people are using whenever automation comes up. But what exactly are these Agents, what do they involve, and how can they be put to good use? That is the first part of this blog post. We also take a look inside an agent and explain a few terms that come up in the context of Agents (this is a bit of a bad pun; if you don't get it yet, it will become clear later on!)

After this explanation of the technologies behind agents, we look back at how Agents evolved in 2025 from a 'smart chatbot' into a genuinely 'usable Agent'. To close, we look ahead at what Agents could mean going forward, or at least at what we are focusing on.

Key facts

Agents make decisions, workflows follow scripts

LLM + Tools + Context = Autonomous Agent

MCP is the USB-C of AI connectivity

SLMs now run locally on your laptop

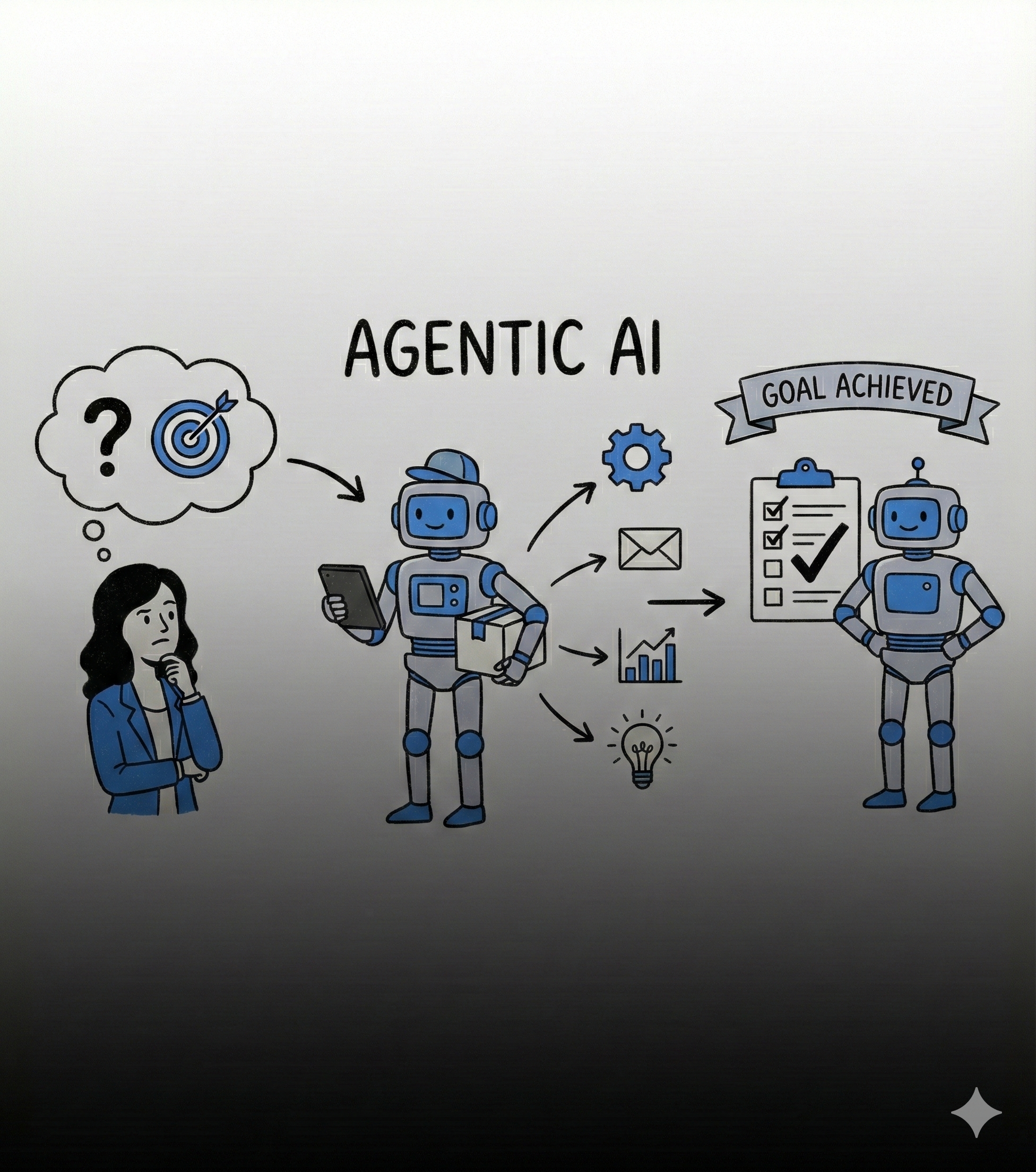

What exactly are Agents?

The first thing we will look at is the definition of the word 'Agent'. For many people, an Agent is a system that takes action on its own. Of course, there is always some kind of starting point or trigger: receiving an email, a sensor value that gets too high, or simply pressing a start button. You might then ask yourself the following question:

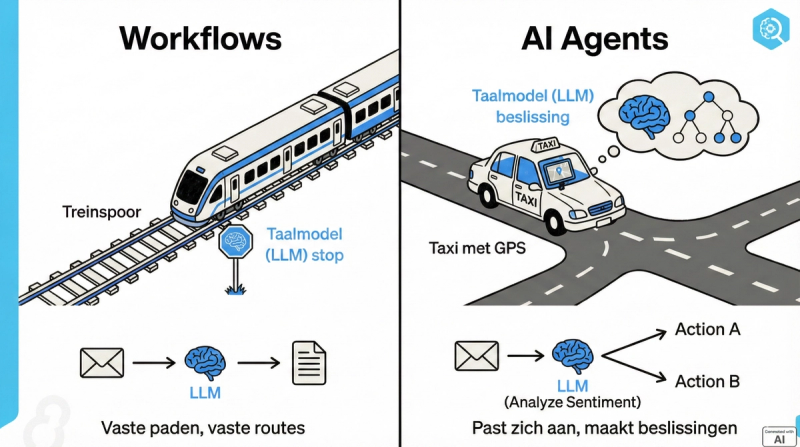

What is the difference between Agents and workflows?

I like to use an analogy for this. You can think of a workflow as a kind of 'railway track': fixed paths that follow fixed routes. That does not mean an AI or language model cannot be involved!

Take, for example, automatically summarizing an email and placing the summary in a document. This is a workflow in which one of the stops is an LLM that writes the summary.

An AI agent, by contrast, is more like a taxi with a GPS that adapts to the situation. There is no single fixed route; here the language model decides which route to take.

Think, for example, of a solution that, instead of summarizing an email, analyzes whether it is a positive or a negative email and then decides which action to take (we only provide the possible actions or destinations, not the paths or tracks).
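To make the railway-versus-taxi contrast concrete, here is a minimal Python sketch. The functions `llm_summarize` and `llm_choose_action` are hypothetical stand-ins for real language-model calls; they use simple keyword rules so the example runs without a model. The action names are also made up for illustration.

```python
from typing import Callable

# --- Hypothetical stand-ins for LLM calls (keyword rules, so the sketch runs offline) ---
def llm_summarize(email: str) -> str:
    # A real workflow step would ask a language model for a summary here.
    return "Summary: " + email[:40]

def llm_choose_action(email: str, options: list[str]) -> str:
    # A real agent would ask the model to pick one of `options`;
    # here a simple negativity check decides instead.
    negative = ("complaint", "angry", "refund", "broken")
    is_negative = any(word in email.lower() for word in negative)
    return options[0] if is_negative else options[1]

# Workflow: a fixed track. Every email takes the same route; the LLM is just one stop.
def run_workflow(email: str, document: list[str]) -> None:
    document.append(llm_summarize(email))

# Agent: we only provide the possible destinations (actions), not the route.
# Dict order matters here: options[0] is the escalation action.
ACTIONS: dict[str, Callable[[str], str]] = {
    "escalate_to_support": lambda email: "Support ticket created",
    "send_thank_you": lambda email: "Thank-you reply sent",
}

def run_agent(email: str) -> str:
    action = llm_choose_action(email, list(ACTIONS))  # the "model" picks the route
    return ACTIONS[action](email)
```

The workflow always does the same thing, whatever the email says; the agent's behaviour depends on what the model decides. In a real agent, only `llm_choose_action` would change: it would send the email and the list of action names to a language model and parse its answer.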

Hopefully this makes the difference between an Agent and a workflow a bit clearer. An Agent will Perceive (gather and process information), Plan (decide what happens next through reasoning), Act (carry out steps with the available tools) and Learn (adapt based on the results). This brings me straight to

How an Agent works under the hood

Here you can see an example of how you can picture an Agent: a kind of manager who knows which people are on their team. When it receives an assignment (in this case, writing an email and performing calculations), it starts by drawing up a plan and then carries it out.

But what if we look at such a solution from a technical point of view? What is behind it?

The engine of an Agent is an LLM (large language model). The strength of an LLM is predicting words: based on the text you give it, it predicts the most likely continuation, and in that way tries to answer your question as well as possible. What does this have to do with Agents, you might ask? Well, you give the language model not only your question but also a list of Tools it can use.

You can think of these Tools as functionalities. In the example, these are the calculator, the (other) language model and the email client.

The language model then tries, as well as it can, to work out which tools are needed and in which order to answer your question, and those tools are then executed.

For simplicity, I have left some of the more technical terms out of this explanation of an Agent, but I would still like to briefly highlight a few
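The manager analogy can be sketched in Python as follows. This is a minimal illustration under clear assumptions: the three tools mirror the example (calculator, second language model, email client) but are hypothetical toy functions, and `plan` is a stub standing in for the planning LLM; it returns a fixed plan instead of actually reasoning over the task.

```python
import ast
import operator

# --- Tools: plain functions the agent is allowed to use (hypothetical examples) ---
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression: str) -> str:
    """Evaluate + - * / on plain numbers without using eval()."""
    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")
    return str(_eval(ast.parse(expression, mode="eval").body))

def write_text(instruction: str) -> str:
    # Stand-in for a second language model that drafts text.
    return f"Dear team, {instruction}"

sent_mail: list[str] = []

def send_email(body: str) -> str:
    # Stand-in for a real email client: just records the message.
    sent_mail.append(body)
    return "sent"

TOOLS = {"calculator": calculator, "write_text": write_text, "send_email": send_email}

def plan(task: str, tool_names: list[str]) -> list[tuple[str, str]]:
    """Hypothetical stub for the planning LLM. A real model would receive the task
    and the tool names and return an ordered list of (tool, argument) steps;
    this stub always returns the same plan. "{prev}" marks where the previous
    step's output is filled in."""
    return [
        ("calculator", "1200 * 3"),
        ("write_text", "the total budget is {prev} euro."),
        ("send_email", "{prev}"),
    ]

def run_planned_agent(task: str) -> str:
    result = ""
    for tool_name, argument in plan(task, list(TOOLS)):
        result = TOOLS[tool_name](argument.replace("{prev}", result))
    return result
```

Calling `run_planned_agent("Calculate a 3-month budget of 1200 euro per month and email it")` returns `"sent"`, and `sent_mail` then contains `"Dear team, the total budget is 3600 euro."`. Here the model plans all steps up front, as in the manager analogy; many agent frameworks instead let the model choose one tool call at a time and look at each result before picking the next.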

Terms and directions Agents have taken over the past year

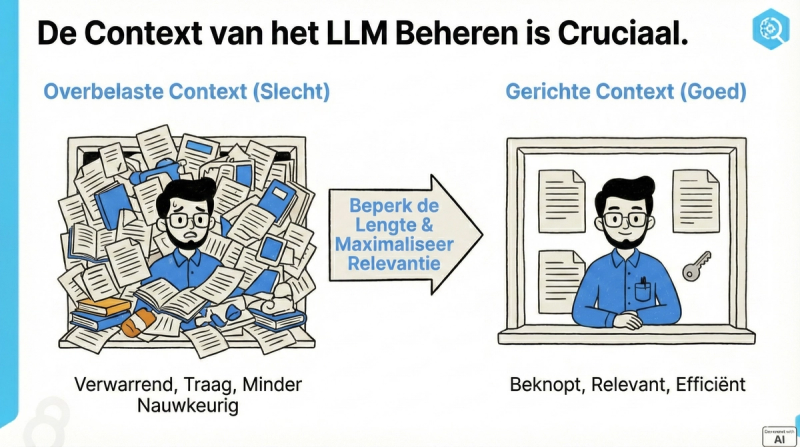

Context engineering

A first term is Context Engineering. You may already be familiar with prompt engineering, where you try to build the optimal prompt. Over the past year, the focus shifted more and more towards context. A model's context is all the text it receives at once, and you can see its context window as the limit of its memory. What is becoming increasingly important for Agents is keeping this context small. The fewer words (tokens) used, the lower the cost and the more 'to the point' the answer will stay. (Hence the pun on 'context' in the intro 😅) People, too, find it easier to remember the details of 1 book than of 20 at once without mixing them up.
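One simple context-engineering technique is to keep only as much conversation history as fits in a fixed budget. The sketch below assumes, for simplicity, that one word counts as one token (real models use a tokenizer, which usually gives a higher count); `build_context` is a hypothetical helper, not part of any specific framework.

```python
def count_tokens(text: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(text.split())

def build_context(system_prompt: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt, then add the most recent messages
    until the token budget is used up; older messages are dropped."""
    used = count_tokens(system_prompt)
    kept: list[str] = []
    for message in reversed(history):  # newest first
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [system_prompt] + list(reversed(kept))  # restore chronological order
```

With a budget of 14, the system prompt `"You are a helpful assistant."` (5 tokens) and the two most recent messages `"question about invoice 42"` and `"invoice 42 is attached"` (4 tokens each) fit, while an older `"old message about the weather"` is dropped. Instead of dropping old messages entirely, one could also replace them with a short summary.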

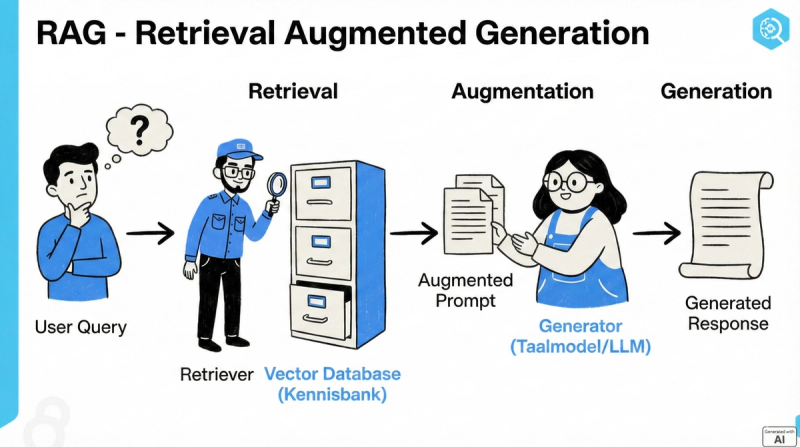

RAG

Another term is RAG, or retrieval-augmented generation. I don't want to get too technical here; you can see it as a way of using a language model with the extra restriction that it may only take its answer from a given set of sources. Google's NotebookLM is a good example of such a tool. You can think of it as first selecting the relevant documents and data, and then passing that selection to the language model.
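The "select first, then pass to the model" idea can be shown in a few lines. This sketch swaps in the simplest possible retrieval, counting words shared between the question and each document; real RAG systems typically rank documents with vector embeddings instead. The document names and contents are made up, and the resulting prompt would then be sent to a language model (not shown).

```python
import re

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))

def retrieve(question: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by the number of words they share with the question
    and return the names of the k best matches."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda name: len(q & tokenize(documents[name])), reverse=True)
    return ranked[:k]

def build_prompt(question: str, documents: dict[str, str], k: int = 2) -> str:
    # Only the selected sources go into the prompt, plus an instruction to stick to them.
    sources = "\n".join(f"[{name}] {documents[name]}" for name in retrieve(question, documents, k))
    return ("Answer only using the sources below. If the answer is not there, say so.\n"
            f"{sources}\n\nQuestion: {question}")

docs = {
    "leave_policy": "Employees get 20 days of paid leave per year.",
    "parking": "Parking is free for visitors on campus.",
    "it_support": "Reset your password via the IT portal.",
}
```

For the question "How many days of paid leave do employees get?", `retrieve(question, docs, k=1)` selects `leave_policy`, so only that document ends up in the prompt.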

MCP

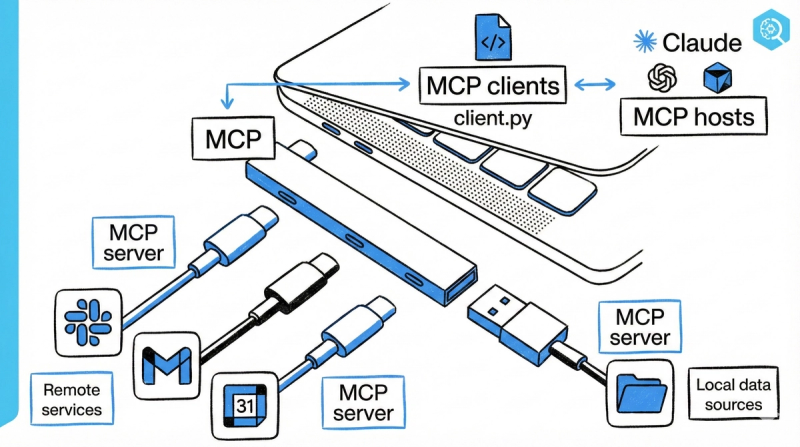

MCP, or Model Context Protocol, is another term that came up a lot over the past year. MCP is essentially a standard 'connector' (an open protocol) between tools and language models. It was originally developed by Anthropic (the makers of Claude) and has since been adopted by other providers as well (Google, Grok, OpenAI, ...). You can see it as the USB-C for connecting functionality to language models.
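Under the hood, MCP messages are JSON-RPC 2.0 requests and responses; a client can ask a server which tools it offers (`tools/list`) and call one of them (`tools/call`). The sketch below is a heavily simplified, in-process version of the server side: it leaves out the transport, the `initialize` handshake and most error handling, and the `add` tool is a made-up example. It is meant to show the shape of the messages, not to replace an official MCP SDK.

```python
import json

# One tool, described the way MCP servers advertise tools:
# a name, a description and a JSON Schema for the arguments.
MCP_TOOLS = {
    "add": {
        "description": "Add two numbers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}

def handle_request(raw: str) -> str:
    """Answer a JSON-RPC 2.0 request for the MCP methods tools/list and tools/call.
    Simplified: no initialize handshake, no transport, minimal error handling."""
    request = json.loads(raw)
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in MCP_TOOLS.items()
        ]}
    elif method == "tools/call":
        value = MCP_TOOLS[params["name"]]["handler"](params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC error code for an unknown method.
        return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})
```

Because every tool is described in the same format, any MCP-capable model client can discover and call it without custom glue code, which is exactly what the USB-C comparison is about.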

SLM

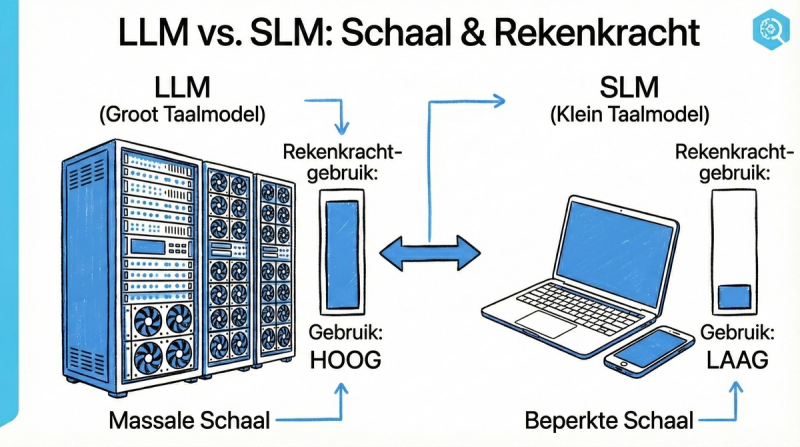

Another term that came up now and then was SLMs, or small language models. They have not yet received as much attention as their larger counterparts, but they have been quietly improving a lot. So much so that you can easily run a language model on a laptop without a GPU (graphics card) that still produces comprehensible output.

These techniques point to some of the changes in the field of Agents and will also form the basis for further improvements next year.
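A quick back-of-the-envelope calculation shows why small models fit on a laptop: the weights alone take roughly (number of parameters) × (bits per parameter) / 8 bytes. The sketch assumes 4-bit quantized weights for the small model and 16-bit weights for the large one; the model sizes are illustrative and not tied to a specific model.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Rough memory needed to hold only the model weights, in gigabytes (10**9 bytes).
    Ignores the KV cache, activations and runtime overhead, so real usage is higher."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9

# Illustrative model sizes (not specific products)
for name, params, bits in [
    ("small model, 3B params, 4-bit", 3e9, 4),
    ("large model, 70B params, 16-bit", 70e9, 16),
]:
    print(f"{name}: ~{weight_memory_gb(params, bits):.1f} GB")
```

This prints about 1.5 GB for the small model, which fits comfortably in ordinary laptop RAM, versus about 140 GB for the large one, which does not.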

What's coming?

The question now is: what comes next, and what should you focus on? As a research group working on this topic, we don't have a crystal ball. Search the internet and you will find every possible prediction; it is a real hype right now. What I can share is what we are focusing on.

The first is running LLMs (as well as SLMs) locally. For this, we have purchased an AI server that will serve us well in our research projects. This is interesting both to avoid depending on cloud providers and to be able to deploy open-source models quickly and easily in our setups.

The next thing we are focusing on is managing context and further exploring how we can build better Agent structures and link them together. We do this with open-source, readily available software as well as with our own structures, depending on the project. Further exploring these connections, structures and context will help Agents move from a 'smart chatbot' to a genuinely 'usable Agent'.

Finally, we will keep following the trends closely, documenting them and incorporating them into our projects.

Hopefully this article has taught you a bit more about what AI Agents are and given you some more context on what is involved technically. If anything is unclear, or if you have questions about what AI Agents could do for you, feel free to get in touch!

Contributors

Authors

Jens Krijgsman, Automation & AI researcher, Teamlead
