A Blueprint for AI Agent Infrastructure

MCP, A2A, Agent, Tool, ... The list of LLM jargon and acronyms keeps growing at a rapid pace. So what are these protocols, services and applications, and where do they fit when building an AI Agent infrastructure? In this article we review the current state of affairs and list what is needed to set up such an ‘Agent’ infrastructure.

This work is part of the research within the Art-IE project, in which we build ‘proof of concept’ LLM solutions for, among others, the insurance sector. With this blog post we try to give a broad picture of what is needed to build an AI Agent system and which components play a role.

Key takeaways

- Tools are functions for an LLM

- The 12 Factor Agent gives structure to an Agent

- Protocols connect Agents

Building an AI Agent Infrastructure

AI Agents can automate quite a lot. They can take over anything from small, mundane tasks up to, possibly, junior-level tasks. This does of course assume good oversight and management of the Agents themselves.

A number of problems surface right away. Everyone builds specific agents, each with a specific goal.

A first problem is how these agents get their data: every specific agent needs certain knowledge and data, and not all of that knowledge can simply be passed along, because of the limits on the context (the length of the message) of language models (LLMs).

Connecting these Agents is the next problem, especially when you work across different platforms.

If you also want to set up a ‘self-hosted’ solution, ‘messaging’ and ‘real-time computing’ quickly become a serious roadblock as well.

So the question is: how do you get started with an Agent Infrastructure, and is there a manual?

Is there a manual?

Short answer: yes! Long answer: it depends. Every tech company jumped on the LLM train as fast as possible. As a result there are many different custom solutions, each reflecting its own platform preferences, ways of working, and so on.

For web apps and SaaS applications there is a well-known methodology: the 12 factor app (https://www.12factor.net/), which lists how best to get started building a SaaS application. There is now also an initiative that compiles the same kind of list for LLMs, or more specifically Agents: 12-factor-agents. It is a fairly new initiative that is still under active development, but I will briefly use the ‘blueprint’ it proposes to illustrate a good Agent workflow.

A step back: what a ‘standard application flow’ looks like

For decades, software has been represented as a kind of graph. This shows the different paths and also makes it possible to check everything.

An early example is automata theory; the best-known model built on this structure is the Turing Machine. More recent structures in which the graph is visible are directed graphs (DG) and directed acyclic graphs (DAG). In recent years, Machine Learning (ML) components have also been added to these DAG structures.

With LLMs and Agents we are now at a point where these clearly defined graphs fade away. This is made possible by the ability of LLMs to decide for themselves which path through the graph is best taken in the software. That brings us to the 12 Factor Agent.
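To make the contrast concrete, here is a minimal sketch in Python. The step names (`load`, `validate`, `summarise`) and the function `choose_next_step` are hypothetical; the latter is a hard-coded stand-in for an LLM call, not a real model. The first runner walks a DAG fixed by the developer, the second lets the "model" pick the next step at run time.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow steps; each one only records that it ran.
def load(log): log.append("load")
def validate(log): log.append("validate")
def summarise(log): log.append("summarise")

STEPS = {"load": load, "validate": validate, "summarise": summarise}

def run_fixed_dag():
    """Classic flow: the graph is fixed in code, so the order is known up front."""
    # Mapping of step -> set of steps it depends on.
    dag = {"validate": {"load"}, "summarise": {"validate"}}
    log = []
    for name in TopologicalSorter(dag).static_order():
        STEPS[name](log)
    return log

def choose_next_step(goal, done):
    """Stand-in for an LLM call: returns the next step name, or None to stop."""
    if "load" not in done:
        return "load"
    if goal == "summary" and "summarise" not in done:
        return "summarise"
    return None

def run_agent(goal, max_steps=10):
    """Agent flow: the path is chosen step by step instead of being fixed."""
    log = []
    for _ in range(max_steps):  # hard cap so a confused model cannot loop forever
        step = choose_next_step(goal, log)
        if step is None:
            break
        STEPS[step](log)
    return log
```

In the fixed version every run takes the same path; in the agent version the path depends on the goal, which is exactly what makes the flow harder to verify up front.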

The 12 Factor Agent

This section is a small deep dive into the 12 factors and what they mean. If you want to read more, you can find everything at 12-factor-agents (which also has visualisations for each step).

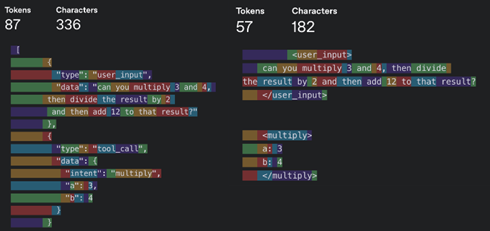

- Factor 1: Natural language to Tool Calls (function calls)

- Explanation: The agent translates instructions or goals expressed in natural language into concrete, executable actions or function calls to tools or systems. This bridges what the user wants to achieve and how the agent carries it out.

- Factor 2: Own your prompts

- Explanation: The instructions and context given to the agent (the prompts) are treated as configurable, manageable assets, comparable to code or configuration files. The key point is that the prompts in your Agent system are built with the same care as the rest of the system.

- Factor 3: Own your context window

- Explanation: Your context window consists of RAG, prompt engineering, history/state and memory. Handle it with care! A different storage format can make a big difference in the number of tokens!

- Factor 4: Tools are just structured outputs

- Explanation: Tools do not have to be complex. They are simply structured output from the LLM that then triggers classic deterministic code.

- Factor 5: Unify execution state and business state

- Explanation: The execution state (current step, next step, status) and the business state (what the agent has done in the workflow) are best represented as simply as possible, to avoid unnecessary abstraction and complexity.

- Factor 6: Launch/Pause/Resume with simple APIs

- Explanation: Agents offer clear and simple interfaces (APIs). External systems must be able to start, pause or resume them easily.

- Factor 7: Contact humans with tool calls (function calls)

- Explanation: LLM APIs depend on specific tokens that determine whether the output is ‘plain text’ or structured data. Whichever is needed, make sure the result is clear to both the tool and the human.

- Factor 8: Own your control flow

- Explanation: The logic that determines how the agent makes decisions and which steps it takes (the control flow) is yours to control. Take ownership of summarising, combining and other actions in the flow, and review them carefully.

- Factor 9: Compact errors into the context window

- Explanation: Tools and Agents are able to resolve certain errors themselves (self-healing). If an error still occurs after 3 attempts, escalate it to a human (via a model decision or deterministically). Always put only a short error message in the context.

- Factor 10: Small, focused Agents

- Explanation: Instead of building large monolithic agents, start small with a focus on one task. You can still chain several small Agents together or use them inside other code.

- Factor 11: Trigger from anywhere, meet users where they are

- Explanation: This factor can be implemented once factors 6 and 7 are in place. It is really the next step: giving people access to their Agent workflows from different platforms.

- Factor 12: Make your agent a “stateless reducer”

- Explanation: An agent takes the current state and an input and produces the next action and an updated state, without holding internal state between calls.
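Several of these factors fit together in a few lines of code. The sketch below is an illustration under assumptions, not the reference implementation from 12-factor-agents: `fake_llm`, the `add` tool and the `State` fields are all hypothetical. It shows a tool call as plain structured output (factor 4), one state object for both execution and business state (factor 5), short error messages with escalation after 3 failures (factor 9), and a `step` function that acts as a stateless reducer (factor 12).

```python
import json
from dataclasses import dataclass, replace

MAX_ERRORS = 3  # after this many consecutive failures, hand over to a human

@dataclass(frozen=True)
class State:
    events: tuple = ()       # full history: execution and business state together
    errors: int = 0          # consecutive error count
    status: str = "running"  # running | done | needs_human

def add(a, b):
    return a + b

TOOLS = {"add": add}  # deterministic code that the model is allowed to trigger

def fake_llm(events):
    """Stand-in for a model call; returns a structured tool call as JSON text."""
    if any(e["type"] == "tool_result" for e in events):
        return json.dumps({"tool": "done", "args": {}})
    return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})

def step(state, llm=fake_llm):
    """Stateless reducer: takes a state, returns a new one, keeps nothing itself."""
    call = json.loads(llm(state.events))
    if call["tool"] == "done":
        return replace(state, status="done")
    try:
        result = TOOLS[call["tool"]](**call["args"])
    except Exception as exc:
        # Keep only a short message so the context window stays small.
        event = {"type": "error", "message": f"{type(exc).__name__}: {exc}"[:200]}
        errors = state.errors + 1
        status = "needs_human" if errors >= MAX_ERRORS else "running"
        return replace(state, events=state.events + (event,), errors=errors, status=status)
    event = {"type": "tool_result", "tool": call["tool"], "result": result}
    return replace(state, events=state.events + (event,), errors=0)

def run(state, llm=fake_llm, max_steps=20):
    """Drive the reducer until the agent is done or needs a human."""
    for _ in range(max_steps):
        if state.status != "running":
            break
        state = step(state, llm)
    return state
```

Because `step` keeps nothing between calls, the `State` object can be serialised and stored after any step, which is what makes launch, pause and resume (factor 6) straightforward.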

We now know how best to get started with Agents, which raises the question of standardisation. We want a system that any LLM can plug into, and this is where all those new protocols come in.

What about all those protocols?

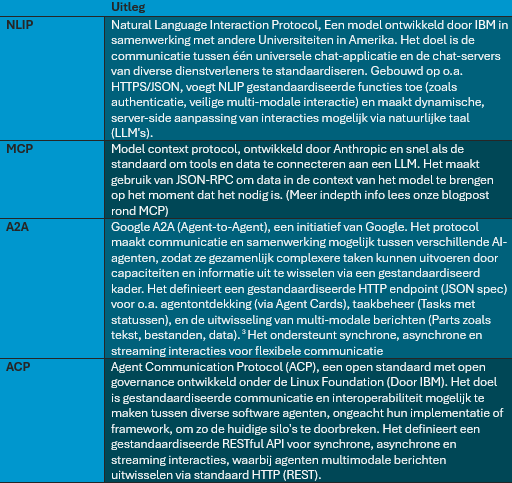

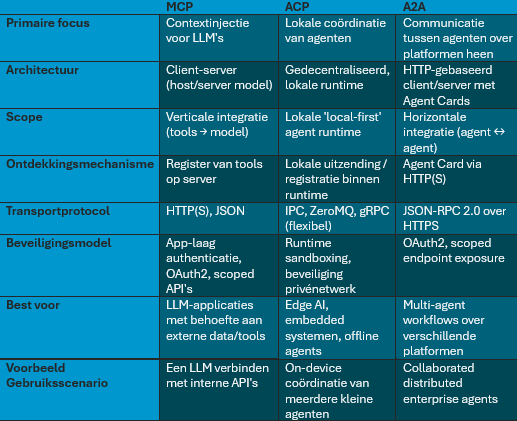

We now know how to build our Agents, but how do we connect them to context, tools and other Agents? Many new protocols are being created for this. Below is a short comparison of the most recent and best-known protocols and standards.

TLDR: Differences and applications

- NLIP: Standardises chat communication between a user and services/companies (such as banks or public transport), to remove the need for separate apps.

- MCP: Standardises the connection between an LLM and tools/data, so that the model can use external information or perform actions.

- A2A: Standardises communication between independent AI agents across platforms or vendors for collaborative tasks. Suited to distributed enterprise environments and multi-agent workflows.

- ACP: Standardises local coordination of agents, often on the same device or within a local network. Suited to Edge AI, embedded systems and offline agents.
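As an illustration of what "standardising the connection" means for MCP: its messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method, passing the tool name and its arguments. The sketch below only builds such a request; the tool name `lookup_policy` and its argument are hypothetical, and the transport (stdio or HTTP) and the server side are left out.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids must be unique per session

def mcp_tool_call(name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

# Hypothetical tool exposed by an MCP server in an insurance setting.
request = mcp_tool_call("lookup_policy", {"policy_id": "P-123"})
wire = json.dumps(request)  # the text that would be sent over the transport
```

Any MCP-aware client can produce this same message shape, which is why one tool server can be shared by different LLM applications.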

And now some tables to dig a little deeper into the protocols.

Since NLIP is more of a precursor to ACP, I leave it out of the comparison and we focus on the three largest.

With this we have an explanation and a comparison of the existing protocols, and we can move on to developing a real Agentic application or solution.

An example architecture

Now that we have a ‘holistic’ picture from the 12 Factor Agent and know the protocols that let Agents communicate with data and with each other, we can start building a complete Agent flow.

It consists of the following 4 ‘layers’:

- Protocols: to define the What.

- Frameworks: to define the How.

- Messaging infrastructure: to take care of the Flow.

- Real-time computation: to provide the thinking capacity.

In this blog post we have looked at the holistic approach of the 12 Factor Agent and then briefly at the protocols. The three remaining layers are Frameworks (CrewAI, Google's Agent Development Kit (ADK), LangGraph, Haystack), Messaging infrastructure (Kafka, RabbitMQ, Redis Streams) and Real-time computation (Apache Flink, Apache Spark, Materialize).

Different tools can fill each of these layers. Depending on the stage of the application, POC or production, increasingly specific tools are available. We are currently still testing various tools and will cover the other layers in future blog posts.
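To give a feel for what the messaging layer does, here is a minimal in-process sketch. It assumes nothing about the tools we will eventually choose: the `Topic` class is a hypothetical stand-in built on Python's `queue.Queue`, not the Kafka, RabbitMQ or Redis Streams API, and the two agents are simple functions with a keyword rule in place of an LLM.

```python
import json
import queue

class Topic:
    """In-process stand-in for a broker topic or queue."""
    def __init__(self):
        self._q = queue.Queue()

    def publish(self, message):
        # Brokers carry bytes or text, so serialise the message.
        self._q.put(json.dumps(message))

    def poll(self):
        """Return the next message, or None when the topic is empty."""
        try:
            return json.loads(self._q.get_nowait())
        except queue.Empty:
            return None

def intake_agent(text, out):
    """Hypothetical agent that turns incoming text into a task message."""
    out.publish({"task": "classify", "text": text})

def classifier_agent(inbox, out):
    """Hypothetical agent; a keyword rule stands in for an LLM decision."""
    while (msg := inbox.poll()) is not None:
        label = "claim" if "damage" in msg["text"].lower() else "other"
        out.publish({"text": msg["text"], "label": label})

tasks, results = Topic(), Topic()
intake_agent("Water damage in the kitchen", tasks)
intake_agent("Change of address", tasks)
classifier_agent(tasks, results)
```

The agents only know the topics, not each other, so either one can be replaced or scaled without touching the other. A real broker adds persistence, delivery guarantees and distribution on top of this idea.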

Sources

https://github.com/humanlayer/12-factor-agents

https://thenewstack.io/a2a-mcp-kafka-and-flink-the-new-stack-for-ai-agents/

https://github.com/boundaryml/baml

https://github.com/nlip-project/documents/blob/main/NLIP_Specification.pdf

https://en.wikipedia.org/wiki/Deterministic_acyclic_finite_state_automaton

https://modelcontextprotocol.io/introduction

https://agentcommunicationprotocol.dev/introduction/welcome

https://www.promptingguide.ai/techniques

https://www.anthropic.com/engineering/building-effective-agents

https://www.12factor.net/

https://medium.com/@elisowski/what-every-ai-engineer-should-know-about-a2a-mcp-acp-8335a210a742

https://google.github.io/A2A/

The next step

Hopefully you now know a bit more about how best to build an Agent, and have a picture of where research groups or other consultants can assist you.

We at the AI Lab keep following everything around LLMs and Agents, both within the Art-IE project and within other subsidised projects (including O&O and COOCK+). If you have a question about agents and would like to set up a research project, do not hesitate to get in touch.

Contributors

Authors

Jens Krijgsman, Automation & AI researcher, Teamlead
