MCP, een "nieuwe" AI-integratiestandaard

/

MCP, een "nieuwe" AI-integratiestandaard

Volgend op de post van vorige week over RAG, in .Het Toegepaste AI LabvanArt-IE,we volgen ook de trends om te zien wat nieuw is en wat kan worden geïmplementeerd in onze huidige en toekomstige projecten. In de afgelopen weken heeft het Model Context Protocol (MCP) het internet stormachtig veroverd. Dit, hoewel het relatief gezien niet zo 'nieuw' is, gezien de snelheid van LLM-oplossingen.

Main section

MCP

De onderliggende structuur van MCP

Model Context-protocol of MCP is een open standaard voor de verbinding van 'AI-assistenten' met de systemen waar de gegevens zich bevinden. Het protocol is ontwikkeld door Anthropic met één eenvoudig doel voor ogen: een universele manier te hebben om LLM's met hun gegevens te verbinden.

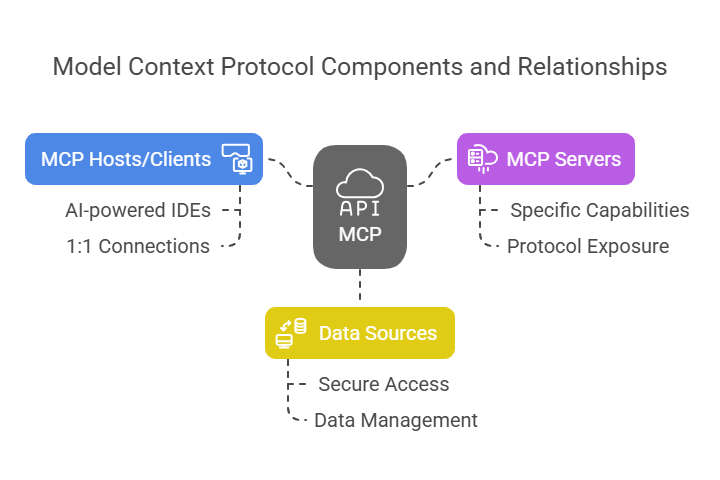

In wezen volgt MCP een client-serverarchitectuur die gestandaardiseerde communicatiekanalen tussen AI-toepassingen en gegevensbronnen tot stand brengt. Het protocol bestaat uit primaire componenten:

- MCP Hosts/ClientsToepassingen zoals Claude Desktop of AI-gestuurde IDE's die 1:1 verbindingen met servers onderhouden

- MCP-serversLichte programma's die specifieke mogelijkheden blootstellen via het protocol

- GegevensbronnenLokale of externe systemen die MCP-servers veilig kunnen benaderen

Deze architectuur transformeert de traditionele uitdaging van het integreren van meerdere AI-modellen (M) met verschillende gegevensbronnen (N) van een M×N probleem naar een beter beheersbare M+N oplossing. In plaats van aangepaste verbindingen te creëren tussen elk model en elke gegevensbron, kunnen ontwikkelaars de MCP-standaard eenmaal implementeren en universele connectiviteit bereiken.

Kijkend naar de onderliggende technologieën en het ontwerppatroon van hoe MCP is gemaakt, dat lijkt op een fabrieks- of adapterpatroon, zou je kunnen beginnen met je af te vragen of dit zelfs zo 'revolutionair' of hype-waardig is.

Wacht, dat heb ik eerder gezien.

Inderdaad, wanneer je naar de oplossing kijkt, lijkt het niet zo complex of baanbrekend. Het is in wezen een manier om je gegevens te verbinden met een LLM en mogelijk Agents of Tools eraan toe te voegen.

Om deze gegevens te verzenden, maakt MCP gebruik van JSON-RPC 2.0 voor berichtuitwisseling. JSON-RPC is een API (Application Programming Interface) protocol en is ontworpen voor hoge prestaties. Er zijn enkele aandachtspunten, zoals hoge koppeling aan de service-applicatie, en het later wijzigen van de service-implementatie heeft een grote kans om de clients te breken.

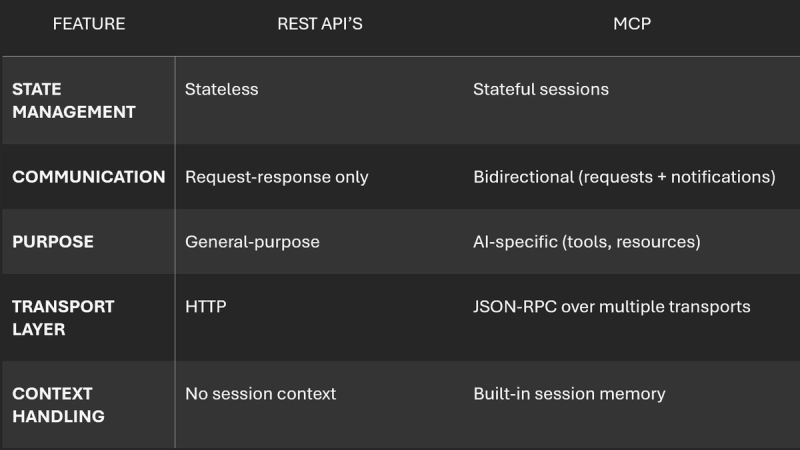

Bij het lezen over JSON-RPC wordt REST snel genoemd als een mogelijke concurrent, hoewel het meer een 'state transfer' architectuur is. REST is goed bekend voor datatransfer en API-aanvragen. Je zou JSON-RPC kunnen zien als een soort minder acties, meer doorvoeroplossing ervan. In het geval van werken met LLM's en veel data is de keuze voor JSON-RPC duidelijk.

Vergelijking met REST API's, terwijl REST API's veel worden gebruikt voor communicatie tussen systemen, introduceert MCP verschillende belangrijke verschillen die zijn afgestemd op AI-toepassingen:

Dit verandert niets aan het feit dat een standaard die gebruikmaakt van bestaande, geteste protocollen en ontwerppatronen geweldig zou kunnen zijn om de ruimte verder te verbeteren en oplossingen meer 'modelafhankelijk' te maken, en zou kunnen helpen toekomstige vendor lock-in te voorkomen.

Waarom het (waarschijnlijk) een goede zaak is

Het hebben van een standaard en open communicatieprotocol is altijd een goede zaak. Kijk bijvoorbeeld naar hoe Apple iMessage blokkeert. Het is geweldig dat een standaard nu door alle grote aanbieders van LLM's wordt geïmplementeerd, zoals OpenAI of Anthropic. De adoptie van veel tools en platforms die het mogelijk maken om een client te hebben (VS-code, Cursor, …) of zelf een MCP-server te bedienen zoals Github is ook een stap vooruit.

We staan aan het begin van deze nieuwe standaard en er moet nog veel onderzoek worden gedaan naar de praktische integratie en wat de implicaties zijn.

Lijkt interessant, maar is het veilig?

Dus we weten nu dat MCP een protocol is om LLM met gegevens te verbinden, we weten dat het nuttig kan zijn, maar is het eigenlijk veilig? Gewoon al je gegevensbronnen naar een LLM doorgeven, wat zijn enkele problemen die zich kunnen voordoen?

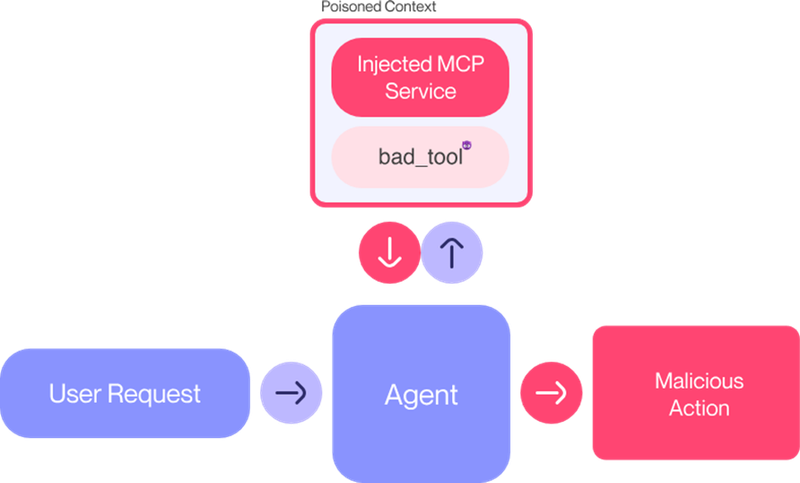

Een van deze problemen kan Tool Poisoning Attacks zijn, dit is wanneer een kwaadaardige server gevoelige gegevens van de gebruiker kan exfiltreren en het gedrag van de agent kan overnemen. Ondertussen ook instructies van andere, vertrouwde servers kan overschrijven.

Bron:https://invariantlabs.ai/blog/mcp-beveiligingsmelding-tool-besmettingsaanvallen

Als je geïnteresseerd bent, raad ik ten zeerste aan om het rapport van Invariant Labs erover te lezen, maar voor dit artikel wordt het slechts gebruikt als een voorbeeld van de gevaren van het gebruik van iets als MCP zonder na te denken over mogelijke problemen die zich kunnen voordoen.

Bij het werken met nieuwe protocollen en geavanceerde technologieën is het altijd belangrijk om mogelijke problemen in gedachten te houden en deze zo nauwkeurig mogelijk te volgen!

Bottom section

Om MCP te zijn of niet te zijn, dat is de vraag.

Dus begin je nu MCP te implementeren in je momenteel in ontwikkeling zijnde tools of platforms? Wat ons betreft bij deAI Lab Onderzoeks groepwe zijn bezig met de implementatie van MCP in onzeArt-ieoplossingen als een manier om de technologie te testen en mogelijk gebruik te maken van de toekomstige kracht die het kan hebben.

Het is duidelijk dat niet alle bibliotheken er klaar voor zijn, elke open source vector database of LLM-tool probeert het protocol snel te implementeren, waardoor sommige implementaties in een bèta-toestand verkeren.

Persoonlijk zou ik zeggen houd MCP in gedachten, als het mogelijk is begin dan met het implementeren van een ruwe versie, maar wacht tot de ruimte wat rijper is voordat je er met je hoofd in duikt.

Neem gerust contact op met ons Onderzoekslaboratorium voor vragen over MCP of andere LLM-gerelateerde onderwerpen.

Bronnen

- https://modelcontextprotocol.io/introduction

- https://www.digidop.com/blog/mcp-ai-revolution

- https://invariantlabs.ai/blog/mcp-beveiligingsmelding-tool-besmettingsaanvallen

- https://github.blog/changelog/2025-04-04-github-mcp-server-public-preview/

- https://openai.github.io/openai-agents-python/mcp/

- https://invariantlabs.ai/blog/mcp-beveiligingsmelding-tool-besmettingsaanvallen

- https://www.claudemcp.com/

- https://apidog.com/blog/mcp-vs-api/

Contributors

Authors

/

Jens Krijgsman, Automation & AI researcher, Teamlead

Want to know more about our team?

Visit the team page