Pipecat: De ruggengraat van spraakagenten

/

Pipecat: De ruggengraat van spraakagenten

In de afgelopen weken heeft ons onderzoeksteam verschillende artikelen gepubliceerd over trends binnen het snel evoluerende landschap van AI-taalmodellen. Maar hoe til je je AI-agenten echt naar een hoger niveau? Hoe zorg je ervoor dat gebruikers op een natuurlijke, menselijke manier met deze systemen kunnen communiceren zonder elke keer nieuwe prompts te hoeven typen?

De meest intuïtieve manier waarop mensen met elkaar communiceren blijft...gesproken dialoogWe vertalen dat principe naar AI-systemen in de vorm vanStemagenten,slimme spraakgestuurde AI's die met gebruikers kunnen converseren. Door een combinatie vanspraak-naar-tekst(STT) intekst-naar-spraakTekst-naar-spraak (TTS) technologieën stellen taalmodellen in staat om niet alleen menselijke spraak te begrijpen, maar ook op een overtuigende manier te reageren. Dit creëert een echte conversatie tussen mens en machine.

In deze blogpost willen we u graag voorstellen aanPipecateen open-source tool die als de ruggengraat voor dergelijke spraakagenten dient.

Main section

Pipecat

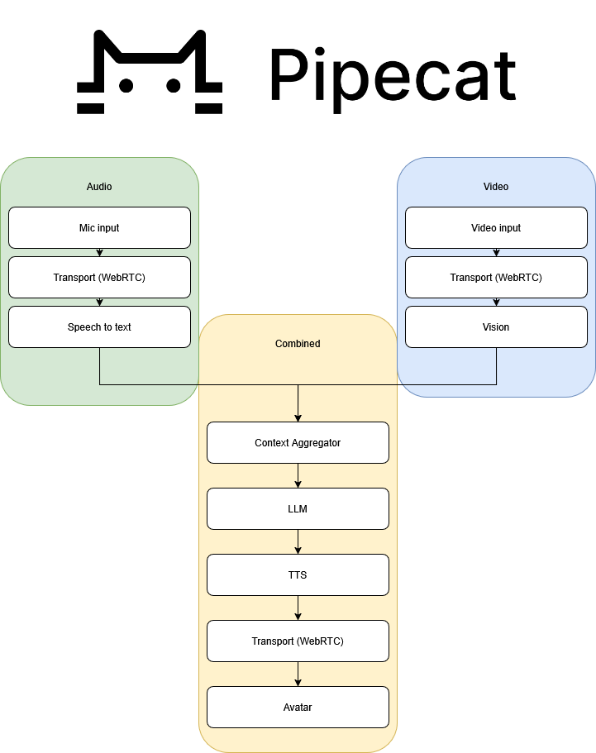

Pipecat is een open-source Python-framework dat de eenvoudige creatie van een complete mogelijk maakt.De term "pipeline" verwijst doorgaans naar een reeks stappen of fasen in een gegevensverwerkingsworkflow, vaak gebruikt in machine learning en data engineering contexten. Het kan de verzameling, verwerking en analyse van gegevens omvatten, evenals de implementatie van modellen.om in te stellen voor het bouwen van AI-gestuurde spraakagenten. Het framework verbindt verschillende AI-componenten, zoals taalmodellen, transcriptie en TTS, in een modulaire stroom.

Het idee achter Pipecat is eenvoudig maar krachtig:De beschikbare output van één model wordt onmiddellijk doorgegeven aan het volgende model in de keten, zelfs als de output nog niet compleet is.Dit zorgt voor minimale responstijd en een soepele interactie.

Een voorbeeld: taalmodellen genereren vaak hun antwoorden in stukken. In plaats van te wachten op het volledige antwoord, stuurt Pipecat elk ontvangen stuk direct naar het TTS-model, dat het stuk onmiddellijk hardop voorleest. Op deze manier werkt elk model in de pijplijn parallel, waardoor de algehele responstijd van uw Voice Agent verrassend laag blijft.

Hieronder vindt u een diagram dat een typische Pipecat-stroom visualiseert:

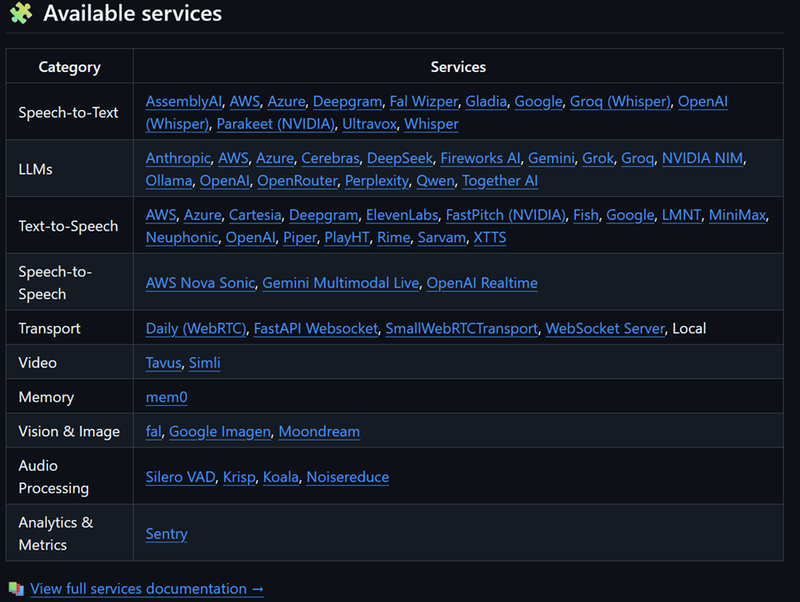

Pipecat-diensten: modulair en uitbreidbaar

Een van de sterke punten van Pipecat is demodulariteit van de dienstenNieuwe modellen integreren in uw bestaande pipeline? Geen probleem. Pipecat wordt continu bijgewerkt met nieuwe standaarddiensten die u in staat stellen eenvoudig verbinding te maken met de nieuwste AI-modellen.

Zelfs als je werkt met modellen of systemen die (nog) niet officieel worden ondersteund, kun je eenvoudig je eigen serviceklasse schrijven op basis van bestaande voorbeelden. Dankzij deze flexibiliteit hoef je je applicatie niet elke keer helemaal opnieuw te schrijven wanneer er een beter model beschikbaar komt. Dit is een cruciaal voordeel in een domein dat zo snel evolueert.

WebRTC

Om audio en video efficiënt te verwerken, gebruikt Pipecat verschillende transportlagen.

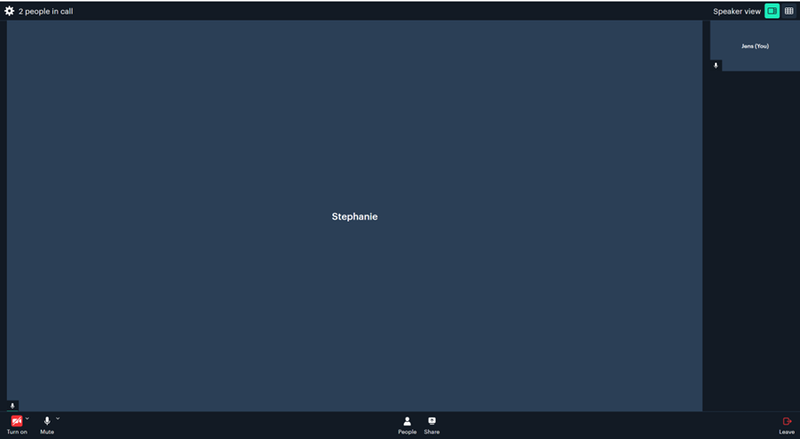

De meest robuuste en productieklare optie is nog steeds deDagelijkse WebRTC-transport, waaruit Pipecat oorspronkelijk is ontstaan (Pipecat is opgericht vanuit Daily.co). Dit maakt het mogelijk om spraakagenten rechtstreeks in videogesprekken te integreren.

Stel je voor: een virtuele vergaderruimte waar een AI-agent meeluistert, vragen beantwoordt, gespreksoefeningen begeleidt of als assistent optreedt. Dit is geen sciencefiction; het is vandaag de dag al mogelijk met Pipecat.

Ondertussen zijn er ook alternatieve manieren om de transportlaag voor tests in te stellen, maar voor productie doeleinden is de Daily transportlaag nog steeds de beste optie.

Bottom section

Toepassingen

Gespreks oefeningen

In trainingsprogramma's waar zachte vaardigheden en communicatieve vaardigheden centraal staan, bieden spraakagenten aanzienlijke toegevoegde waarde.

Traditioneel worden deze oefeningen in paren uitgevoerd, waarbij de effectiviteit grotendeels afhangt van de inzet en kwaliteit van je oefenpartner. Bovendien is het vaak moeilijk voor studenten of trainees om deze vaardigheden buiten de lesuren verder te oefenen.

Met het projectAvatalkWe reageren hierop. We ontwikkelen concrete gebruiksgevallen waarin gebruikers hun conversatievaardigheden op elk moment van de dag kunnen oefenen met een AI-spraakagent.

Door het taalmodel een uitgebreide prompt te geven, wijzen we een specifiekeDe term "persona" verwijst naar een karakter of identiteit die een gebruiker of een groep gebruikers vertegenwoordigt in de context van gebruikerservaringontwerp, marketing of softwareontwikkeling. Het wordt vaak gebruikt om de behoeften, gedragingen en doelen van gebruikers te begrijpen door gedetailleerde profielen te creëren die de kenmerken van doelgebruikers samenvatten. In AI en technologie helpen persona's bij het afstemmen van producten en diensten om beter te voldoen aan de verwachtingen en vereisten van gebruikers.aan de agent. Op deze manier ontvangt het AI-systeem alle noodzakelijke context om zijn rol in het gesprek op een geloofwaardige en educatieve manier te vervullen.

Klantenservice / Intakegesprekken

Ondersteuningsteams besteden vaak veel tijd aan het beantwoorden van eenvoudige, repetitieve vragen. Dit is een verspilling van hun expertise, die beter kan worden benut in complexere gevallen.

Spraakagenten bieden hier een directe oplossing:

- Eenvoudige vragenwordt automatisch beantwoord door het AI-systeem.

- Complexere vragenzal worden doorgestuurd naar een ondersteunend personeel lid, samen met een automatisch gegenereerd rapport over de klantvraag met behulp van MCP.

Indien nodig plant de agent een afspraak met een werknemer. Als iemand onmiddellijk beschikbaar is, kan hij zelfs direct deelnemen aan het gesprek.

Op deze manier verhoog je niet alleen de efficiëntie van je klantenservice, maar verbeter je ook de klantervaring: snel, professioneel en consistent.

Eindelijk

Pipecat is niet alleen een tool; het is een solide basis voor de toekomst van menselijke interactie met AI. Of je nu een spraakgestuurde assistent bouwt, een educatief conversatiesysteem ontwikkelt of je klantenservice automatiseert: met Pipecat leg je de juiste basis.

Nieuwsgierig? Neem contact op met AI Lab voor een demo of om de mogelijkheden voor een op maat gemaakte oplossing te bespreken.