'Vibe' Check: Navigating the Rise of AI-Powered Coding Tools and Platforms

This article was written in April 2025. Because the code editors mentioned are developing quickly, the features described may work differently by now.

For the AI Upd8 project we follow trends in the AI tooling landscape. AI code editors, and even complete AI-based application development platforms, are popping up everywhere. There is even a new term, 'Vibe Coding', which essentially means coding on 'vibe': just prompting the AI system and suggesting changes without digging deeper into the code.

In this blog post I will look at the tools, try to compare them where possible, and also raise the question of how these tools should be used and where problems arise. This also stems from what is seen in education and among young programmers (and even in my own behaviour).

The Tools

Let's start with the tools. Broadly, two main categories can be observed.

- AI code editors

- AI development platforms

For comparison I tried to build, and you would never guess, a todo app in all of them. There are never enough todo apps.

There are a few things I want to make clear up front. I am a full-stack developer with 5 years of experience. My focus is more on the implementation and backend of POCs (proofs of concept), but I have used various JS frameworks in earlier projects. I am no authority on prompting, since I work for a research department of a university where most of our projects stop at the POC phase.

For what the resulting app should contain, I drew up a few baseline requirements.

- Simple, minimalist frontend application

- Display date, time and weather

- Show only today's tasks

- Move older tasks to an archive and delete them after a week.

- For the code editors, a separate FastAPI backend
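The "today / archive / delete after a week" requirement can be sketched in a few lines of Python. This is only an illustration of the rule, not the code any of the tools generated: the `Task` class, its `created` field, the `classify` function and the 7-day constant are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "after a week" means tasks older than 7 days are deleted.
ARCHIVE_RETENTION_DAYS = 7


@dataclass
class Task:
    title: str
    created: date  # hypothetical field: the day the task was added


def classify(task: Task, today: date) -> str:
    """Return 'today', 'archive' or 'delete' for a task."""
    age_days = (today - task.created).days
    if age_days <= 0:
        return "today"  # created today (or dated in the future)
    if age_days <= ARCHIVE_RETENTION_DAYS:
        return "archive"  # older than today, but within the retention window
    return "delete"  # past the retention window


today = date(2025, 4, 15)
tasks = [
    Task("write blog post", today),
    Task("try Cursor rules", today - timedelta(days=3)),
    Task("old experiment", today - timedelta(days=10)),
]
for task in tasks:
    print(task.title, "->", classify(task, today))
```

Keeping the rule in one small pure function makes it easy to check by hand, whichever tool writes the rest of the app.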

AI code editors

The first are the AI code editors. These are enhanced programming environments where AI models help with certain tasks. They are aimed mainly at developers who want to leverage the new capabilities of large language models. There are still some differences between them: some editors take agentic approaches, while others only offer an advanced autocompleter.

Over the past months all these editors have implemented similar functionality. This makes comparing them very much a 'personal' matter. A code editor can be a blunt tool, but it can also be sharpened by looking at all the shortcuts and advanced functionality it offers. By spending time tuning it to your preferences, you can certainly improve how you work with any of them.

For this blog post I chose the following 3 AI editors. I am sure there are other, more niche and newer code editors such as Zed, or some plugin for Vim or the JetBrains suite. I selected these 3 because they are all based on the same user interface.

- GitHub Copilot

- Cursor

- Windsurf

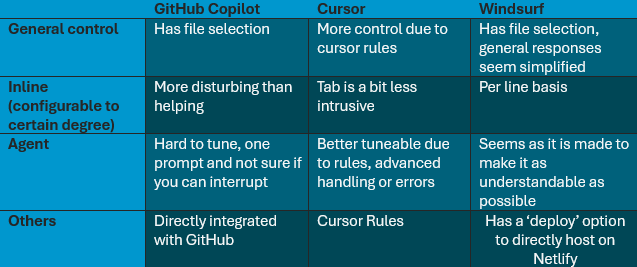

For people who are not interested in the whole process, here is the TL;DR from my personal experience.

My general approach with all of them is to start with the agentic approach and have it help set up the project according to my specifications. As the next step I look through the code, reorganise where needed and test locally. Next I want to generate some tests, to see how the agents handle this. As a last test I change a text field so that it can also have a different maximum length, which should also be checked in the tests. As the final step I want to containerise my applications for easy deployment. Here I check whether it uses the right references and how the overall context of the codebase is taken into account.
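To make the "different maximum length" step concrete, here is a minimal sketch of what such a change and its checks could look like. The function name `validate_title`, the default limit of 100 characters and the error messages are hypothetical and not taken from the generated project.

```python
# Assumption: 100 characters is the default limit; the real app may differ.
DEFAULT_MAX_TITLE_LENGTH = 100


def validate_title(title: str, max_length: int = DEFAULT_MAX_TITLE_LENGTH) -> str:
    """Return the trimmed title, or raise ValueError if it is empty or too long."""
    cleaned = title.strip()
    if not cleaned:
        raise ValueError("title must not be empty")
    if len(cleaned) > max_length:
        raise ValueError(f"title exceeds {max_length} characters")
    return cleaned


def test_default_limit() -> None:
    assert validate_title("a" * 100) == "a" * 100
    try:
        validate_title("a" * 101)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError above the default limit")


def test_custom_limit() -> None:
    # The changed requirement: callers may pass a different maximum length.
    assert validate_title("short", max_length=5) == "short"
    try:
        validate_title("too long", max_length=5)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError above the custom limit")


test_default_limit()
test_custom_limit()
print("all checks passed")
```

The interesting part when reviewing an agent's output is whether it adds a check like `test_custom_limit` on its own, or only changes the field.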

As most people know, the model used also makes a big difference. All of this will probably improve with newer and better versions. For uniformity they all used Claude 3.7 Sonnet.

They all support custom MCP servers (if you want to know more about this, read our previous article on it: https://ailab.howest.be/news/mcp), but these will not be used here.

You can opt for a low-AI workflow or a high-AI workflow. For this article I chose a high-AI workflow to really experience how it feels and what can be achieved with it. I will walk through one process in full and, for the others, point out the notable differences. This spares you from reading everything again.

GitHub Copilot

For transparency: GitHub Copilot is the go-to AI assistant I personally use. Why? Simple: it comes with the GitHub Education pack, it is built directly into my current VS Code environment, and I can use it as a ChatGPT window in my editor. It can of course do more, too!

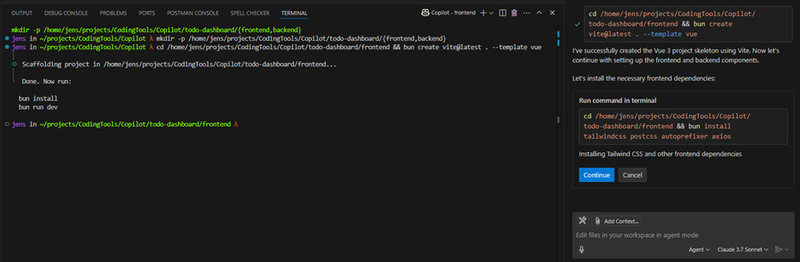

Start by using the agent in Copilot to kick off the project with the project prompt.

By using the agent approach you can follow the steps and see what the AI executes. This process is not perfect. If you do not verify it, errors are inevitable. The agent will detect these and try to fix them. Keep in mind that this can also make everything much more complex if you do not verify what it does. The point of using a code editor is to keep control of your code.

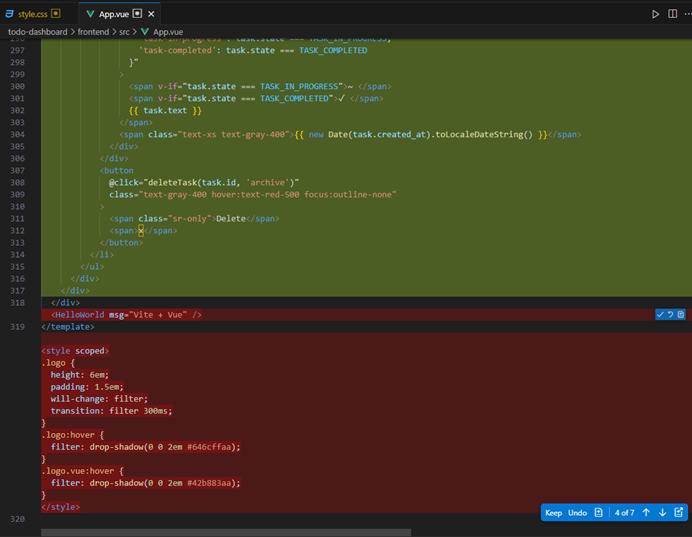

Next, you can review the changes Copilot made as a Git diff, or as a changelog of the previous files.

When the Copilot agent has iterated for a while, it will indicate this. You can then specify further what you want or simply let it continue.

While creating the backend I noticed that a .venv and a requirements.txt were created. This is not really needed when using a package manager like uv (this may be a bit technical, but it shows that the agent is not infallible). Mentioning this before continuing makes the agent change its response.

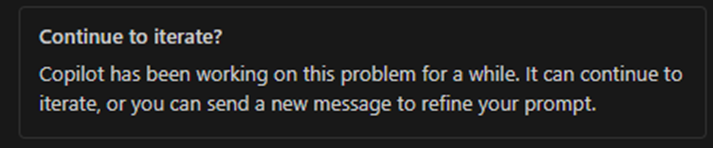

Here we can see where extra context from an MCP server could be useful. The uv documentation describes its own workflow for starting a project.

The agent does not follow this way of working. Now the question is: do we keep handing this to the agent, or do we start taking over? For now I simply pasted in this knowledge and reviewed what it said, and it seemed to be on the right track.

Then there was an extra problem: uv was not yet installed in my base Python interpreter. The agent saw the command to create the environment fail, carried on creating all the files and stated: 'It seems the uv command is not available in the current environment. Let's proceed by creating the backend files directly and provide instructions later on how to install the required dependencies.' I had not configured this behaviour. If I wanted something different, it would have to be written into the main prompt. This can get really annoying. To solve it, I manually installed the package on the main interpreter.
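One way to avoid this kind of surprise is a small preflight check before handing the task to an agent. The sketch below only uses `shutil.which` from the Python standard library. The helper name `missing_tools` and the list of required tools are assumptions for illustration.

```python
import shutil


def missing_tools(required: list[str]) -> list[str]:
    """Return the names in `required` that cannot be found on PATH."""
    return [name for name in required if shutil.which(name) is None]


# Assumption: this project relies on the `uv` and `bun` executables.
missing = missing_tools(["uv", "bun"])
if missing:
    print("Install these before starting the agent:", ", ".join(missing))
else:
    print("All required tools are available.")
```

Running this first tells you whether a failing command is a missing tool on your machine or a genuine mistake by the agent.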

Some problems had already crept into the code, for example the use of deprecated methods. I changed these manually.

Other problems, such as documentation that does not match how things should actually be handled, also need to be fixed. These small errors pile up quickly and make creating the application more complex.

The venv folder it created was also unnecessary, since uv installs into a .venv folder.

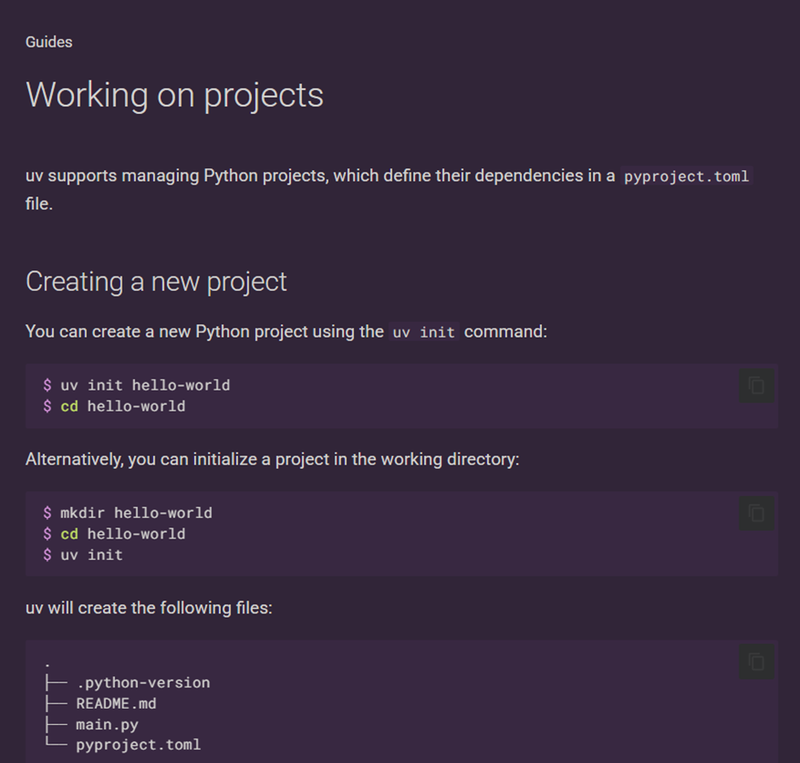

The next step was setting up tests for the backend. This was done by selecting the required context and then stating that tests for the backend are needed. It first created the folders and then started adding tests.

Personally I do not really encourage using AI to write tests. It can lull you into a kind of 'looks good, probably fine' mentality. The purpose of tests is to really test your application on certain logic. Having AI produce this code can even defeat the purpose if it is not checked properly.

When running the tests, they all passed, with a few warnings due to deprecation notices. I changed these manually.

Now that the backend is set up, we can look at the frontend again. From the first agent request we already had some frontend code, which will probably need to change because of the backend that now exists.

Again, I selected the context I considered valuable for the question of connecting the backend to the frontend, together with the prompt for the agent. It then started changing the frontend. When installing new packages, the same problems occurred as with uv, this time for the frontend with Bun. The AI models really want to use plain npm or pip. This comes from the enormous amount of data they were trained on: only a small percentage of that dataset would involve these more 'niche' package managers. It works better when more prompting is given, but it is something to keep an eye on.
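Deprecation warnings are easy to overlook in a passing test run. A minimal sketch of how to make them fail loudly, using only the standard `warnings` module, is shown here. `old_helper` is a hypothetical stand-in for whatever deprecated call the generated code used, and `raises_deprecation` is a helper invented for this example.

```python
import warnings


def old_helper() -> int:
    """Hypothetical function standing in for a deprecated library call."""
    warnings.warn("old_helper is deprecated", DeprecationWarning, stacklevel=2)
    return 42


def raises_deprecation(func) -> bool:
    """Return True if calling `func` triggers a DeprecationWarning."""
    with warnings.catch_warnings():
        # Escalate deprecation warnings to exceptions inside this block only.
        warnings.simplefilter("error", DeprecationWarning)
        try:
            func()
        except DeprecationWarning:
            return True
    return False


print(raises_deprecation(old_helper))
print(raises_deprecation(lambda: 1 + 1))
```

If the tests run under pytest, the same effect can be had for a whole run with `pytest -W error::DeprecationWarning`, so that generated code using deprecated calls fails instead of passing with warnings.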

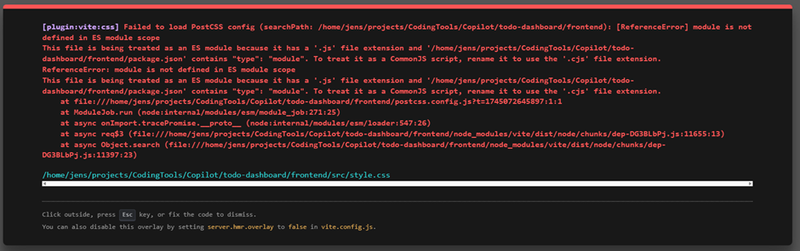

Then running the frontend and … Error

To be completely honest, it was an error I suspected. Again, asking for something like Tailwind makes it harder for the model. It is used to working with normal CSS styling. The Tailwind configuration goes a bit deeper, and combined with Bun that makes it a puzzle.

After getting it working manually, I asked the model to remove all the CSS it had created and make the frontend truly Tailwind.

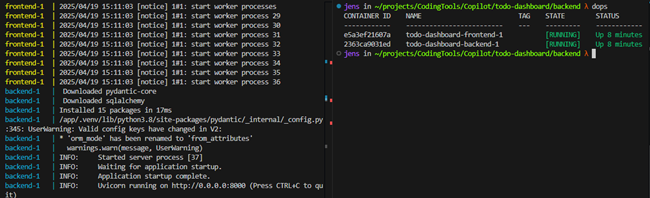

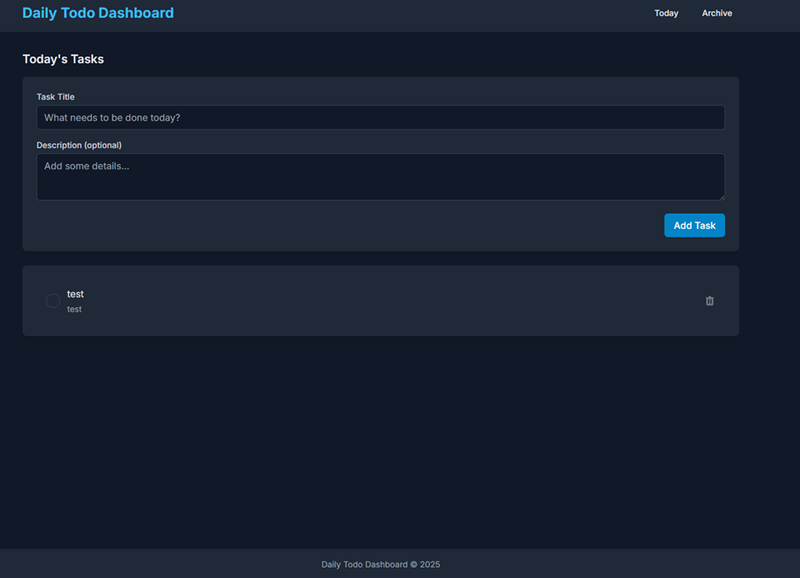

After about a minute of waiting the result was ready to view. The website and backend were operational (after running both manually).

The last step is simply to take the whole repository and see whether all the context, from Bun to uv and routing, is taken into account when creating Dockerfiles and a Docker Compose file to containerise these apps. Containerisation is a way of bundling applications so that if it works on my machine, it also works on other people's machines. We will probably write a more in-depth explanation of what it is and what it can do. If you are interested, let us know!

Running this step exposed even more errors from setting up the uv environment. These were then fixed manually.

Apart from this small error it actually did quite well: it created a proper network, connected the services and rewrote the code so that everything works containerised.

This concludes the creation of a containerised todo app using GitHub Copilot. It took about 1 to 2 hours, including documenting the steps and manually fixing some problems. All in all, not bad for getting something operational as quickly as possible.

Now for the codebase. Overall it is reasonably readable and manageable. This is partly thanks to the simple nature of the application. The backend is nicely compartmentalised into separate functionalities. The frontend, on the other hand, is not so great: everything is lumped into a single App.vue file. This makes it easier and harder at the same time. It may come down to preference, but I would have liked to see some more components. That would have to be arranged manually, by having an architecture document or schema up front on how to build the application, or by... vibing, or by adding it to the prompt.

There are also things that were not looked at, for example the security of everything, such as adding bearer tokens or JWT. This is something I would recommend handling yourself and making sure it is correct. If you use AI to make your work faster, take the time to make sure your work is also better, and do not forget to use your own skills.
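As a reminder of what "handling it yourself" involves at the very least, here is a small sketch of a bearer token check using only the Python standard library. It is not a complete authentication scheme and not what any of the tools generated. The shared secret `EXPECTED_TOKEN` and both function names are assumptions for the example.

```python
import hmac
from typing import Optional

# Assumption: a single shared secret; a real app would load it from configuration.
EXPECTED_TOKEN = "change-me"


def extract_bearer_token(authorization_header: Optional[str]) -> Optional[str]:
    """Return the token from a 'Bearer <token>' header value, or None."""
    if not authorization_header:
        return None
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer" or not token.strip():
        return None
    return token.strip()


def is_authorized(authorization_header: Optional[str],
                  expected: str = EXPECTED_TOKEN) -> bool:
    """Check the header against the expected token."""
    token = extract_bearer_token(authorization_header)
    if token is None:
        return False
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(token.encode(), expected.encode())


print(is_authorized("Bearer change-me"))
print(is_authorized("Bearer wrong"))
```

Details such as the constant-time comparison are exactly the kind of thing that is easy to miss when only vibing through generated code.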

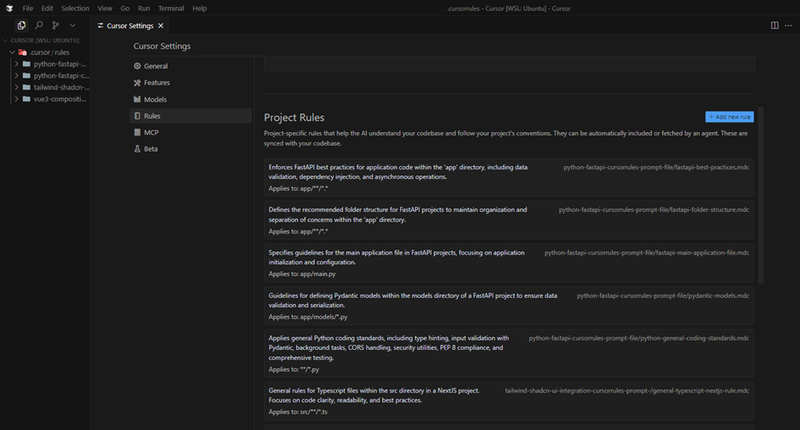

Cursor

Moving on to Cursor to create the same application, I first took a closer look at the IDE. For example, Cursor has its own .cursor/rules folder for your Cursor rules and other settings.

Since the other tools do not have this functionality, I wanted to try it out. So what did I do? I searched for rules files of interest for Python FastAPI, Tailwind and other libraries I planned to use. You can then mention the rules the way you mention a file, or simply let the agent select what it needs based on the description.

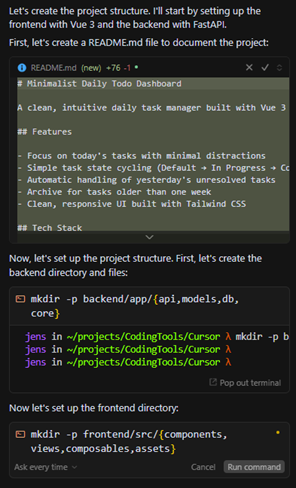

After setting up the rules I gave the agent the same prompt.

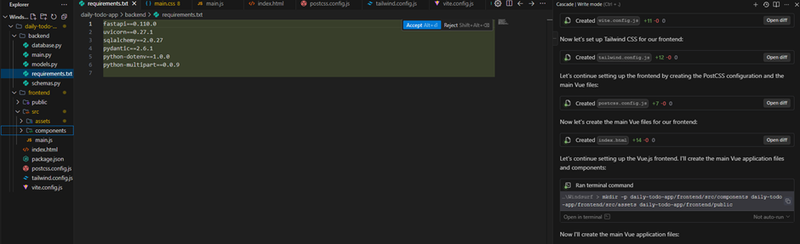

One difference from the start was that the 'run command' was executed in the agent tab, which kept it more encapsulated in the agent process instead of jumping to the main terminal window. It also started with the backend and not the frontend (this may be the model, but I assume the extra rules also had something to do with it).

The same problems appeared again: it did not use the uv manager for Python and defaulted to pip with the requirements.txt file.

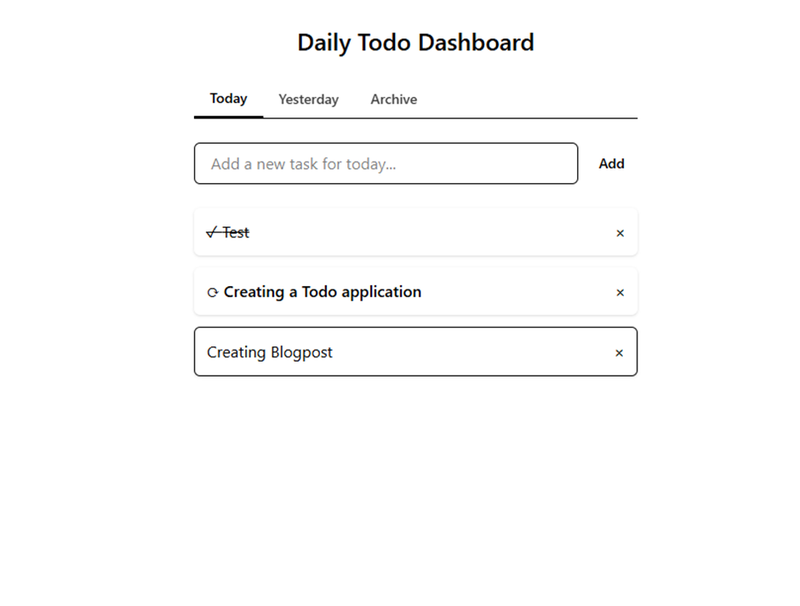

Thanks to the rules, the overall structure was also much better! The frontend is split into components and follows the Vue 3 rules.

There were some other functionality problems, but I will write these off as an LLM problem and not a Cursor problem.

When creating the containers it had the same problems, even with the existing Cursor rules. I would probably have to create a new one specific to Docker and the tools and libraries used. This could be interesting for companies working with a consistent codebase. For my use case I just made the changes manually.

Result:

Windsurf

Now the last of the tools, Windsurf. Windsurf is said to be a kind of more polished version. Where Copilot is neutral, Cursor is for the thinkers and Windsurf more for the vibes.

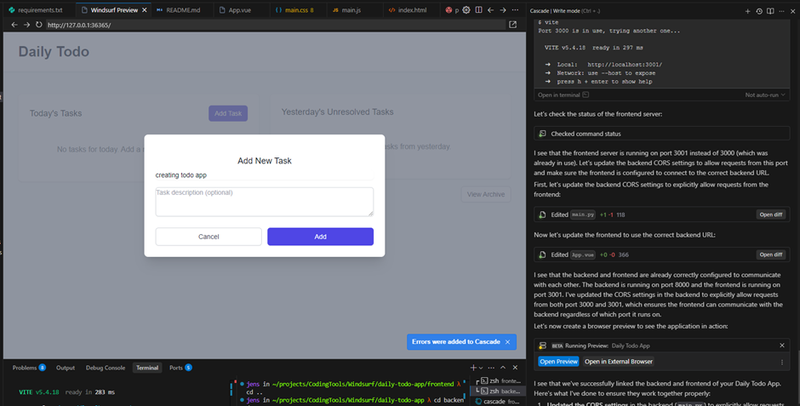

Right from the start it seems that many more ports are forwarded, presumably for some extra ease of use. There is also a prominent 'deploy website' button aimed at deployment to Netlify. So the first impression matches the vibe: make it as easy as possible to start programming.

To start, I selected the 'Write' option and not the chat, as this seemed to be the newest version.

Like the others, it again suffered from the problem of not using the specified package manager. In this case it did not even create a virtual environment. That is not great.

A more general remark is the more 'simplistic' approach: not many folders or nested files, just a few raw files. This would be simpler for a beginner, but would also make the codebase quite messy.

An extra feature not seen in the others is the inclusion of a preview by default. This already brings it a bit closer to the AI development tools, where you can really stay inside the tool. It also has the option to copy console errors directly into the chat prompt.

In the build step the same behaviour comes up again. It seems that context and how it is handled is still something the tools need to figure out. It also performed somewhat worse at containerising. This may be a hiccup of the model, although the other two both approached it in a completely different way. This makes me assume that a rule to simplify as much as possible is given in the system prompt.

After some troubleshooting, this app could also be run containerised.

AI development

The second group are the AI development suites. These are the new kids on the block. Most of them aim to 'democratise' coding for the masses. Or, simply put, to be an easier-to-use site/app development platform than what exists, while also offering more possibilities and customisation by using the code libraries that programmers use for custom-built websites.

For this blog post I chose the following 3, given the recent news and funding for some of them. They are listed in no particular order.

- Lovable

- Firebase Studio

- Replit

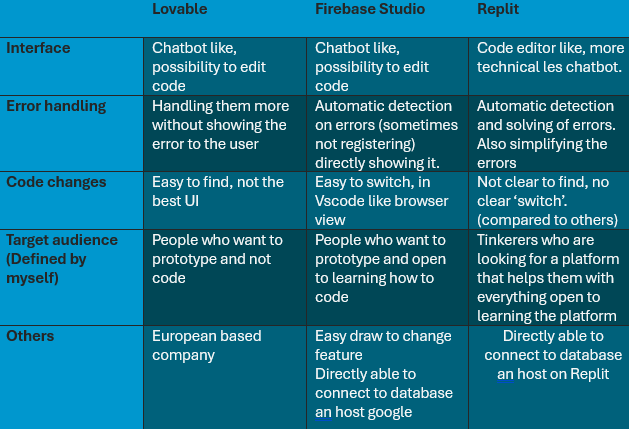

For people who are not interested in the whole process, here is the TL;DR from my personal experience.

My general process with all of them started with a basic prompt, to see how far I could get by only 'vibing' through the code. This is how I assume they expect people to work with them, since most tools keep the code behind 2 to 3 clicks. Next, I handled errors by trying their built-in error-handling systems (spoiler alert: with some frustration).

All workflows for these tools are the same:

- Prompt

- Review

- Prompt

Most feelings and remarks about the coding tools will be the same. To me it sometimes feels slow: not seeing the code and just waiting for the changes feels unproductive. The fact that it also takes longer further along does not bode well for larger projects. As for quality, most tools make a lot of mistakes, which makes sense given that the code editors also needed many manual changes.

I will show some screenshots to give you a general idea and then explain how each specific tool felt in the small exploration I did with it.

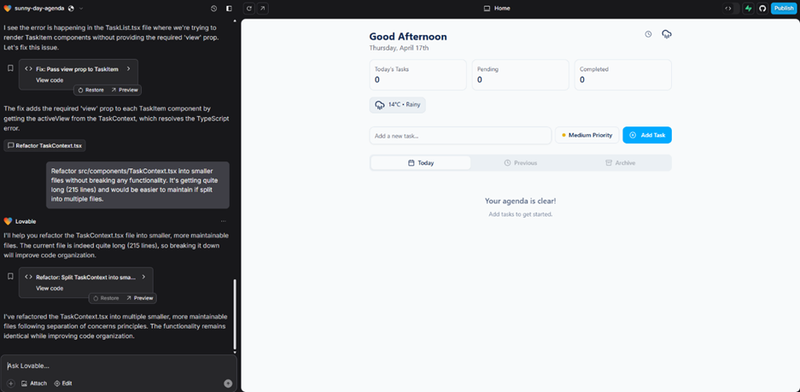

Lovable

Coming from a programming background, it felt as if not much was happening. The chat UI keeps the code changes in the background. The UI was also inconsistent for me, whether because of a weird Chrome plugin or just the generated app refreshing the browser now and then.

The result itself I personally found the best of all, possibly because of the preferences set by the makers of Lovable. The basic prompt did not specify which framework to use, to give the tools the best chance to show possible optimisations for non-coders.

This screenshot shows how the result is displayed next to the 'chat' interface.

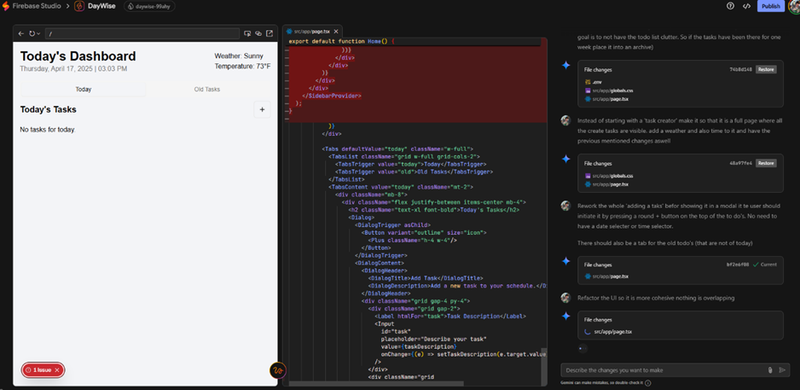

Firebase Studio

Firebase Studio felt more like creating something. It shows the code as it generates. The starting point is also nicely done, with a kind of plan of the overall project and some base colours being set.

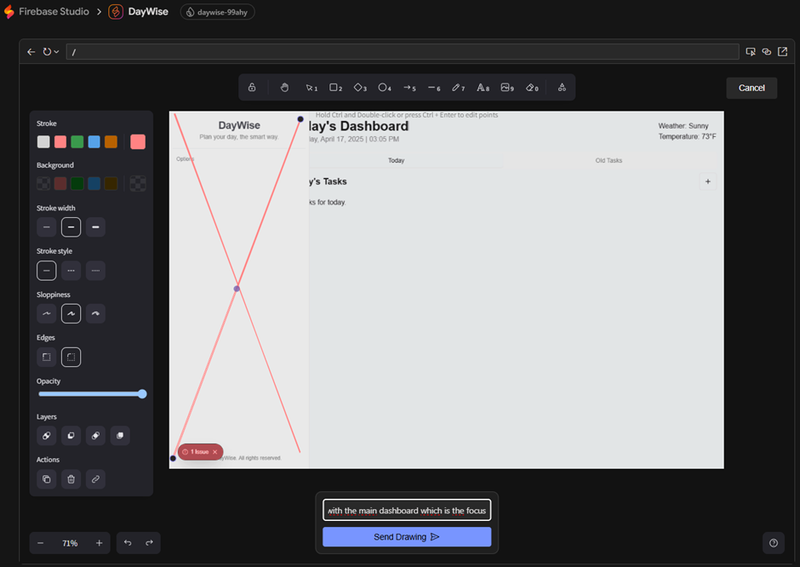

Another nice feature I did not see in the others was the ability to draw to change. For people who want to try coding without really having the knowledge, being able to draw what they want to change can be a big help. I would still recommend learning to code if it were to become your day job. But for a one-off project, perfect!

The overall output was the weakest of all. I tried generating a second time, since the first could have been a bad starting point, and indeed got better results! So keep that in mind. Besides that, the number of errors after the first generation was also higher than with the others. There was not even a result. Since it simply ran through its whole 'agentic flow', I had expected it to at least show something.

The more VS Code-like editing also felt like a good addition. I would say it is aimed more at people who are interested in coding and open to learning and adapting it themselves.

Code generation and changes

Draw-to-change feature

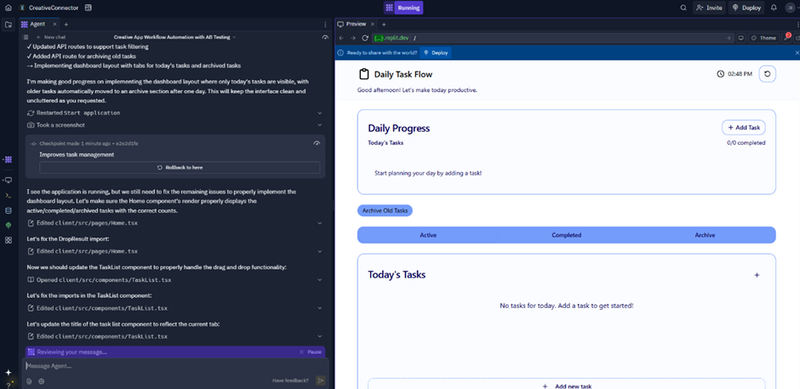

Replit

Replit feels very tinkery, with lots of options, sidebars and so on. This can make it more interesting, but it also makes it a bit daunting to start. There are many extra features I did not go into. The code changes are visible, although they are integrated directly under the folders tab in the top-left corner.

This makes it more of a code-focused approach with the option of having an AI agent.

The result looked good overall, the error handling worked fine, and it could even be deployed on Replit immediately.

What does the future hold for these tools and the general workflow? (Opinion)

This is a more subjective part of the blog post, a kind of account of how I used and use AI. So take it with a grain of salt and feel free to try the tools yourself, or experiment with AI coding in general, and make your own story!

My history with AI tools when creating proofs of concept

To be completely honest, over the past years I have really followed the whole rise of generative AI. I graduated just before LLMs had that real WOW moment, when they actually produced coherent sentences and code. I experimented with running these models locally and tried out the new models, while increasingly looking for ways to integrate AI into my work.

After all, I knew what I was doing: if I could just tell an AI what I needed and it created it, I was capable enough to adapt it. In the beginning it was autocomplete. I started writing the code and Copilot completed it. After some time I felt I was relying more and more on this autocomplete, so I turned it off and moved towards a Q&A approach with the LLM. This was the first time I felt that I had not improved or learned as much since switching to the more AI-based approach.

Well, that is not entirely true. I focused more on the people side of things: setting up projects, talking to others in the company, looking more at software architecture, design patterns, and so on. But the fact that my technical skills seemed to be declining remained true.

After the recent resurgence (if it ever slowed down) of generative AI tools and agents, I started reading more about using these tools. For me it has come to a point where I like the tools and use them for most projects I work on. The overall speed gain for simple proofs of concept is there. But even in these phases I feel that the AI fails at some point. And if you do not keep up your skills, you are not prepared to solve the problems the AI cannot solve.

I am even considering reducing my general AI use, especially for coding, but also for writing, generating ideas, and so on, with the focus on retaining my own skills!

Other use cases

My use case is more or less about creating proofs of concept, testing new applications and trying to solve real problems in companies. To do this we create an MVP (minimum viable product) so that they can then go to a private company that will build the product solution.

For these 'production'-level problems I could only look at academic literature where the use of AI is mentioned. In a recent paper (12 January 2025, https://arxiv.org/abs/2501.06972), Google employees describe where a large company like Google deploys AI. One of the main tasks is migration and maintenance of code. To me this is a good way to deploy AI: not in the creation phase, but in the support phase.

This does not mean your developers cannot make good use of an AI code editor. It will certainly improve their speed, but are you willing to give up part of their understanding of your solution in the long run, or is that not a problem?

Outro

So this blog post is a bit of everything: some practical tools and how they differ, where to find them (aiupdate.be), and an opinion piece on the future of coding and coding tools. Hopefully it was interesting or you learned something. Maybe you disagree with the way I would move forward, or you have other remarks or questions. Feel free to contact me via LinkedIn or by email!

Contributors

Authors

Jens Krijgsman, Automation & AI researcher, Teamlead
